From Wikipedia, the free encyclopedia

| Toba catastrophe theory | |
|---|---|
| Volcano | Toba supervolcano |
| Date | 69,000–77,000 years ago |
| Type | Ultra Plinian |
| Location | Sumatra, Indonesia (coordinates: 2.6845°N 98.8756°E) |
| VEI | 8.3 |
| Impact | Most recent supereruption; plunged Earth into 6 years of volcanic winter, possibly causing a bottleneck in human evolution and significant changes to regional topography.[1] |

Illustration of what the eruption might have looked like from approximately 26 miles (42 km) above Pulau Simeulue.

Lake Toba is the resulting crater lake.

The Toba event[3][4] is the most closely studied super-eruption.[5] In 1993, science journalist Ann Gibbons suggested a link between the eruption and a bottleneck in human evolution, and Michael R. Rampino of New York University and Stephen Self of the University of Hawaii at Manoa supported the idea. In 1998, the bottleneck theory was further developed by Stanley H. Ambrose of the University of Illinois at Urbana-Champaign.


Supereruption

The Toba eruption or Toba event occurred at the present location of Lake Toba about 73,000±4,000 years ago.[6][7] This eruption was the last of the three major eruptions of Toba in the last 1 million years.[8] It had an estimated Volcanic Explosivity Index of 8 (described as "mega-colossal"), or magnitude ≥ M8; it made a sizable contribution to the 100 × 30 km caldera complex.[9] Dense-rock equivalent (DRE) estimates of eruptive volume for the eruption vary between 2,000 km³ and 3,000 km³; the most common DRE estimate is 2,800 km³ (about 7×10¹⁵ kg) of erupted magma, of which 800 km³ was deposited as ash fall.[10] Its erupted mass was 100 times greater than that of the largest volcanic eruption in recent history, the 1815 eruption of Mount Tambora in Indonesia, which caused the 1816 "Year Without a Summer" in the northern hemisphere.[11]

The Toba eruption took place in Indonesia and deposited an ash layer approximately 15 centimetres thick over the whole of South Asia. A blanket of volcanic ash was also deposited over the Indian Ocean, the Arabian Sea, and the South China Sea.[12] Deep-sea cores retrieved from the South China Sea have extended the known reach of the eruption, suggesting that the 2,800 km³ estimate of the erupted volume is a minimum value or an underestimate.[13]
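The mass quoted alongside the 2,800 km³ DRE volume follows from multiplying the volume by a magma density. The sketch below reproduces that arithmetic, assuming a dense-rock density of 2,500 kg/m³. That density is an assumption chosen for illustration and is not stated in the text, and the function and constant names are hypothetical.

```python
# Convert dense-rock equivalent (DRE) volumes to erupted mass.
KM3_TO_M3 = 1e9                      # 1 km^3 = 10^9 m^3
ASSUMED_DRE_DENSITY_KG_M3 = 2500.0   # assumption: not given in the article


def dre_mass_kg(volume_km3, density_kg_m3=ASSUMED_DRE_DENSITY_KG_M3):
    """Return the erupted mass in kg for a DRE volume given in km^3."""
    return volume_km3 * KM3_TO_M3 * density_kg_m3


# Low, most common, and high DRE volume estimates quoted in the text.
low_kg, common_kg, high_kg = (dre_mass_kg(v) for v in (2000, 2800, 3000))

# Share of the most common estimate that was deposited as ash fall.
ash_fall_fraction = 800 / 2800

print(f"mass for 2,800 km^3: {common_kg:.1e} kg")
print(f"range: {low_kg:.1e} to {high_kg:.1e} kg")
print(f"ash-fall share of erupted volume: {ash_fall_fraction:.0%}")
```

Under this assumed density, the 2,800 km³ estimate corresponds to about 7×10¹⁵ kg, which matches the figure in the text. A different density would shift the result proportionally.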

Volcanic winter and cooling

The Toba eruption apparently coincided with the onset of the last glacial period. Michael R. Rampino and Stephen Self argue that the eruption caused a "brief, dramatic cooling or 'volcanic winter'", which resulted in a drop of the global mean surface temperature by 3–5 °C and accelerated the transition from warm to cold temperatures of the last glacial cycle.[14] Evidence from Greenland ice cores indicates a 1,000-year period of low δ¹⁸O and increased dust deposition immediately following the eruption. The eruption may have caused this 1,000-year period of cooler temperatures (stadial), two centuries of which could be accounted for by the persistence of the Toba stratospheric loading.[15] Rampino and Self believe that global cooling was already underway at the time of the eruption, but that the process was slow; the Youngest Toba Tuff (YTT) eruption "may have provided the extra 'kick' that caused the climate system to switch from warm to cold states".[16] Although Clive Oppenheimer rejects the hypothesis that the eruption triggered the last glaciation,[17] he agrees that it may have been responsible for a millennium of cool climate prior to the Dansgaard-Oeschger event.[18]

According to Alan Robock,[19] who has also published nuclear winter papers, the Toba eruption did not precipitate the last glacial period. However, assuming an emission of six billion tons of sulphur dioxide, his computer simulations indicated a maximum global cooling of approximately 15 °C for three years after the eruption, with cooling that would last for decades and be devastating to life. As the saturated adiabatic lapse rate is 4.9 °C/1,000 m for temperatures above freezing,[20] the tree line and the snow line would have been around 3,000 m (9,800 ft) lower at this time. The climate recovered over a few decades, and Robock found no evidence that the 1,000-year cold period seen in Greenland ice core records had resulted from the Toba eruption.

In contrast, Oppenheimer believes that estimates of a drop in surface temperature by 3–5 °C are probably too high, and he suggests that temperatures dropped only by 1 °C.[21] Robock has criticized Oppenheimer's analysis, arguing that it is based on simplistic T-forcing relationships.[22]
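The tree-line and snow-line figure quoted above comes from dividing the modelled cooling by the lapse rate. The sketch below shows that division for the cooling estimates mentioned in this section. It assumes that the tree line simply follows a fixed isotherm, which is a simplification. The function and constant names are illustrative.

```python
# Elevation shift of an isotherm for a given surface cooling.
SATURATED_LAPSE_RATE_C_PER_KM = 4.9  # value quoted in the text


def elevation_shift_m(cooling_c, lapse_rate_c_per_km=SATURATED_LAPSE_RATE_C_PER_KM):
    """Return how far (in metres) an isotherm drops for a cooling in deg C."""
    return cooling_c / lapse_rate_c_per_km * 1000.0


# Cooling estimates discussed in this section, in deg C.
estimates_c = {
    "Robock (maximum)": 15.0,
    "Rampino and Self (upper)": 5.0,
    "Rampino and Self (lower)": 3.0,
    "Oppenheimer": 1.0,
}

for label, cooling in estimates_c.items():
    print(f"{label}: {cooling:g} deg C -> about {elevation_shift_m(cooling):.0f} m lower")
```

With a 15 °C cooling, the shift comes out at a little over 3,000 m, in line with the rounded figure in the text. The smaller cooling estimates imply shifts of roughly 200 m to 1,000 m under the same assumption.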

Despite these different estimates, scientists agree that a supereruption of the scale at Toba must have led to very extensive ash-fall layers and injection of noxious gases into the atmosphere, with worldwide effects on climate and weather.[23] In addition, the Greenland ice core data display an abrupt climate change around this time,[24] but there is no consensus that the eruption directly generated the 1,000-year cold period seen in Greenland or triggered the last glaciation.[25]

In 2013, archaeologists found a microscopic layer of glassy volcanic ash in sediments of Lake Malawi and definitively linked the ash to the 75,000-year-old Toba super-eruption. They found no change in fossil type close to the ash layer, although such a change would be expected following a severe volcanic winter. This result led the archaeologists to conclude that the largest known volcanic eruption in the history of the human species did not significantly alter the climate of East Africa.[26][27]

Genetic bottleneck theory

The Toba eruption has been linked to a genetic bottleneck in human evolution about 50,000 years ago,[28][29] which may have resulted from a severe reduction in the size of the total human population due to the effects of the eruption on the global climate.[30]

According to the genetic bottleneck theory, between 50,000 and 100,000 years ago, human populations sharply decreased to 3,000–10,000 surviving individuals.[31][32] It is supported by genetic evidence suggesting that today's humans are descended from a very small population of between 1,000 and 10,000 breeding pairs that existed about 70,000 years ago.[33]

Proponents of the genetic bottleneck theory suggest that the Toba eruption resulted in a global ecological disaster, including destruction of vegetation along with severe drought in the tropical rainforest belt and in monsoonal regions. For example, a 10-year volcanic winter triggered by the eruption could have largely destroyed the food sources of humans and caused a severe reduction in population sizes.[22] These environmental changes may have generated population bottlenecks in many species, including hominids;[34] this in turn may have accelerated differentiation from within the smaller human population. Therefore, the genetic differences among modern humans may reflect changes within the last 70,000 years, rather than gradual differentiation over millions of years.[35]

Other research has cast doubt on the genetic bottleneck theory. For example, ancient stone tools in southern India were found above and below a thick layer of ash from the Toba eruption and were very similar across these layers, suggesting that the dust clouds from the eruption did not wipe out this local population.[36][37][38] Additional archaeological evidence from southern and northern India has likewise yielded no evidence for effects of the eruption on local populations, leading the authors of the study to conclude, "many forms of life survived the supereruption, contrary to other research which has suggested significant animal extinctions and genetic bottlenecks".[39] However, evidence from pollen analysis has suggested prolonged deforestation in South Asia, and some researchers have suggested that the Toba eruption may have forced humans to adopt new adaptive strategies, which may have permitted them to replace Neanderthals and "other archaic human species".[40] This has been challenged by evidence for the presence of Neanderthals in Europe and Homo floresiensis in Southeast Asia, which survived the eruption by 50,000 and 60,000 years, respectively.[41]

Additional caveats to the Toba-induced bottleneck theory include difficulties in estimating the global and regional climatic impacts of the eruption and lack of conclusive evidence for the eruption preceding the bottleneck.[42] Furthermore, genetic analysis of Alu sequences across the entire human genome has shown that the effective human population size was less than 26,000 at 1.2 million years ago; possible explanations for the low population size of human ancestors may include repeated population bottlenecks or periodic replacement events from competing Homo subspecies.[43]

Genetic bottlenecks in humans

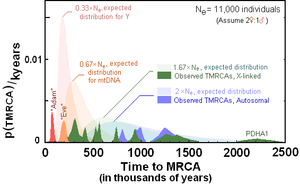

The Toba catastrophe theory suggests that a bottleneck of the human population occurred c. 70,000 years ago, reducing the total human population to c. 15,000 individuals[44] when Toba erupted and triggered a major environmental change, including a volcanic winter. The theory is based on geological evidence for sudden climate change at that time and for coalescence of some genes (including mitochondrial DNA, Y-chromosome and some nuclear genes)[45] as well as the relatively low level of genetic variation among present-day humans.[44] For example, according to one hypothesis, human mitochondrial DNA (which is maternally inherited) and Y chromosome DNA (paternally inherited) coalesce at around 140,000 and 60,000 years ago, respectively. This suggests that the female line ancestry of all present-day humans traces back to a single female (Mitochondrial Eve) at around 140,000 years ago, and the male line to a single male (Y-chromosomal Adam) at 60,000 to 90,000 years ago.[46]

However, such coalescence is genetically expected and does not necessarily indicate a population bottleneck, because mitochondrial DNA and Y-chromosome DNA are only a small part of the human genome and are atypical in that they are inherited exclusively through the mother or through the father, respectively. Most genes are inherited randomly from either the father or the mother, and thus cannot be traced to either matrilineal or patrilineal ancestry.[47] Other genes display coalescence points from 2 million to 60,000 years ago, thus casting doubt on the existence of recent and strong bottlenecks.[44][48]
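One way to see why uniparental markers are expected to coalesce more recently than most nuclear genes, even without a bottleneck, is the textbook neutral coalescent for a constant-size population. The sketch below is an illustration under stated assumptions, not the method used in the studies cited above. It assumes a constant population with an equal sex ratio, a hypothetical size of 10,000 individuals, a hypothetical 25-year generation time, and a sample of 100 lineages. All names and values are illustrative.

```python
# Expected time to the most recent common ancestor (TMRCA) under the
# standard neutral coalescent with constant population size.


def expected_tmrca_generations(gene_copies, sample_size):
    """Expected TMRCA in generations: 2 * gene_copies * (1 - 1/sample_size)."""
    if sample_size < 2:
        raise ValueError("need at least two sampled lineages")
    return 2.0 * gene_copies * (1.0 - 1.0 / sample_size)


HYPOTHETICAL_POPULATION = 10_000   # individuals; illustrative only
ASSUMED_GENERATION_YEARS = 25      # assumption; not from the article
SAMPLE_SIZE = 100                  # sampled lineages; illustrative only

# An autosomal gene has two copies per individual. Mitochondrial DNA and the
# Y chromosome are each passed on by only one sex, with one copy per carrier.
autosomal_copies = 2 * HYPOTHETICAL_POPULATION
uniparental_copies = HYPOTHETICAL_POPULATION / 2

autosomal_years = (
    expected_tmrca_generations(autosomal_copies, SAMPLE_SIZE) * ASSUMED_GENERATION_YEARS
)
uniparental_years = (
    expected_tmrca_generations(uniparental_copies, SAMPLE_SIZE) * ASSUMED_GENERATION_YEARS
)

print(f"autosomal locus: about {autosomal_years:,.0f} years")
print(f"mtDNA or Y chromosome: about {uniparental_years:,.0f} years")
print(f"ratio: {autosomal_years / uniparental_years:.1f}")
```

Under these assumptions the expected coalescence time of a uniparental marker is one quarter of that of an autosomal gene, so a recent common ancestor for mitochondrial DNA or the Y chromosome does not by itself imply a crash in population size.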

Other possible explanations for limited genetic variation among today's humans include a transplanting model or "long bottleneck", rather than a catastrophic environmental change.[49] This would be consistent with suggestions that in sub-Saharan Africa human populations dropped to as low as 2,000 individuals for perhaps as long as 100,000 years, before numbers began to increase in the Late Stone Age.[50]

TMRCAs of loci, Y chromosome, and mitogenomes compared to their probability distributions, assuming that the human population expanded 75 kya from a population of 11,000 individuals

Genetic bottlenecks in other mammals

Some evidence points to genetic bottlenecks in other animals in the wake of the Toba eruption: the populations of the Eastern African chimpanzee,[53] Bornean orangutan,[54] central Indian macaque,[55] the cheetah, and the tiger[56] all recovered from very low numbers around 70,000–55,000 years ago, a period that also saw the separation of the nuclear gene pools of eastern and western lowland gorillas.[57]

Migration after Toba

The exact geographic distribution of human populations at the time of the eruption is not known, and surviving populations may have lived in Africa and subsequently migrated to other parts of the world. Analyses of mitochondrial DNA have estimated that the major migration from Africa occurred 60,000–70,000 years ago,[58] consistent with dating of the Toba eruption to around 66,000–76,000 years ago. However, recent archaeological finds have suggested that a human population may have survived in Jwalapuram, Southern India.[59] Moreover, it has also been suggested that nearby hominid populations, such as Homo floresiensis on Flores, survived because they lived upwind of Toba.[60]