http://nextbigfuture.com/

May 24, 2013

The overnight cost of a fission power plant is ~$4/W ($2/W for China).

• First-of-a-kind fusion plants cost at least $10-20/W

• Which implies that developing fusion reactors at ~GWe scale requires $10-20 billion “per try” (e.g. ITER)

• The chances of fusion development improve significantly if net thermal/electrical power can be produced at a scale ~5-10x smaller, i.e. ~500 MW thermal
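The "per try" figure in the bullets above is just specific cost multiplied by plant capacity. A minimal sketch of that arithmetic follows; the function name is illustrative, and the second calculation assumes (this is not stated in the text) that the same $/W range would still hold for a plant 5-10x smaller.

```python
GW = 1e9  # watts per gigawatt


def overnight_cost_usd(cost_per_watt: float, capacity_watts: float) -> float:
    """Overnight capital cost: specific cost ($/W) times capacity (W)."""
    return cost_per_watt * capacity_watts


# First-of-a-kind fusion at $10-20/W, built at ~1 GWe scale.
full_low = overnight_cost_usd(10, 1 * GW)
full_high = overnight_cost_usd(20, 1 * GW)
print(f"~1 GWe plant: ${full_low / 1e9:.0f}-{full_high / 1e9:.0f} billion per try")

# Assumption (not from the text): the same $/W range still applies to a
# plant 5-10x smaller. Real costs rarely scale this cleanly.
small_low = overnight_cost_usd(10, GW / 10)
small_high = overnight_cost_usd(20, GW / 5)
print(f"5-10x smaller: ${small_low / 1e9:.0f}-{small_high / 1e9:.0f} billion per try")
```

Under that linear-scaling assumption, a smaller machine would bring the cost of each attempt down to the low single-digit billions, which is the motivation for the last bullet.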

It would be good to have a more reasonable Tokamak fusion option.

I still like John Slough's fusion reactor designs more. He could have net gain this year or next year. It looks even cheaper and faster to develop.

The Lawrenceville Plasma Physics dense plasma focus fusion also seems to have a chance to lower energy costs by ten times, while tokamaks are trying not to be two to ten times more expensive.

General Fusion also seems more promising.

Also, any nuclear fusion option needs to be better than improved nuclear fission.

Molten salt nuclear fission looks to greatly reduce the waste and have improved costs.

Canadian David LeBlanc is developing the Integral Molten Salt Reactor, or IMSR. The goal is to commercialize the Terrestrial reactor by 2021. It should have initial costs of $3.5/W and could have costs that are $1/W.

Fast-Acting Nuclear Reactor Will Power Through Piles of Plutonium

Even the latest generation of nuclear power reactors can only harvest about five percent of the energy stored in their radioactive fuel supplies, and the toxic leftovers must then be buried deep underground to slowly decay over hundreds of thousands of years. But thanks to a new breed of sodium-cooled pool reactor, we may soon be able to draw nearly 100 times more energy from nuclear fuels, while slashing their half-lives by two orders of magnitude.

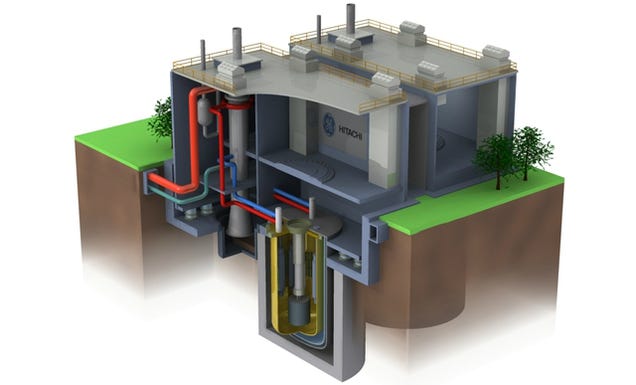

The PRISM reactor (that's Power Reactor Innovative Small Module, not the NSA's spy program supreme) is the result of more than 60 years of research by the DoE, Argonne National Lab, and General Electric. Like other existing reactors, the PRISM harnesses the radioactive energy of artificial elements like plutonium to drive turbines which generate electrical current. The PRISM will just do so way, way faster and much more efficiently. Assuming, of course, one ever actually gets built.

In fact, according to GE's Eric Loewen, it should, theoretically at least, be able to extract 99 times as much energy from a given unit of uranium as any reactor currently in use. This is due to the system's use of liquid sodium as its coolant, rather than water. The liquid sodium doesn't suck as much energy from radiating neutrons as water does and that extra energy in turn creates a more efficient fission reaction. And not only does this generate more electrical power per unit of fuel, it drastically reduces the half-life of the remainder: only about 300 years, down from the 300,000 years of conventional nuclear waste. Each reactor is expected to produce 311 MW of electricity, though they will generally operate in 622 MW pairs over their 60-year service lives.

Six decades really isn't that long but these reactors are so efficient that they could, again in theory at least, consume the entirety of Great Britain's nuclear stockpile over that duration. Heck, the 140 metric ton stockpile in the town of Sellafield, along England's northern coast, alone could be used to power the whole of Britain for 100 years with a fraction of the waste that conventional reactors produce.

We've got a while before that estimate can be confirmed, however. The UK Nuclear Decommissioning Authority is currently reviewing PRISM technology along with a number of other options to reduce, if not eliminate, its nuclear stockpile. A report released this past January stated that PRISM "should also be considered credible, although further investigation may change this view." Either way, the UK government doesn't appear to be in much of a hurry to decide: a PRISM reactor, should it be chosen, wouldn't likely come online until around 2030. [GE Reports - GE 1, 2 - The Engineer - The Ecologist]

Nanocomp Technologies will be supplying carbon nanotube yarn to replace copper in airplanes in 2014

May 10, 2012

Nanocomp Technologies (NTI) lightweight wiring, shielding, heating and composite structures enhance or replace heavier, more fatigue-prone metal and composite elements to save hundreds of millions in fuel, while increasing structural, electrical and thermal performance. In Inc. Magazine, Nanocomp Technologies indicates that they will be selling their carbon nanotube yarn (CTex) to airplane manufacturers in 2014 to replace copper wiring.

Nanocomp’s EMSHIELD sheet material was incorporated into the Juno spacecraft, launched on August 5, 2011, to provide protection against electrostatic discharge (ESD) as the spacecraft makes its way through space to Jupiter and is only one example of many anticipated program insertions for Nanocomp Technologies’ CNT materials.

In a recent Presidential Determination, Nanocomp’s CNT sheet and yarn material has been uniquely named to satisfy this critical gap, and the Company entered into a long-term lease on a 100,000 square foot, high-volume manufacturing facility in Merrimack, N.H., to meet projected production demand.

The U.S. Dept. of Defense recognizes that CNT materials are vital to several of its next generation platforms and components, including lightweight body and vehicle armor with superior strength, improved structural components for satellites and aircraft, enhanced shielding on a broad array of military systems from electromagnetic interference (EMI) and directed energy, and lightweight cable and wiring. The Company’s CTex™ CNT yarns and tapes, for example, can reduce the weight of aircraft wire and cable harnesses by as much as 50 percent, resulting in considerable operational cost savings, as well as provide other valuable attributes such as flame resistance and improved reliability.

Pure carbon wires carry data and electricity, yarns provide strength and stability

Nanocomp Technologies, Inc., a developer of performance materials and component products from carbon nanotubes (CNTs), in 2011 announced they had been selected by the United States Government, under the Defense Production Act Title III program (“DPA Title III”), to supply CNT yarn and sheet material for the program needs of the Department of Defense, as well as to create a path toward commercialization for civilian industrial use. Nanocomp’s CNT yarn and sheet materials are currently featured within the advanced design programs of several critical DoD and NASA applications.

NTI converts its CNT flow to pure carbon, lightweight wires and yarns with properties that rival copper in data conductivity, with reduced weight, increased strength and no corrosion. NTI's wire and yarn products are presently being used both for data conduction and for structural wraps. For contrast, NTI's CNT yarns were tested against copper for fatigue: where copper broke after nearly 14,000 bends, NTI's CNT yarns lasted almost 2.5 million cycles, roughly 180 times as many.
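As a quick check on the bend-test figures quoted above, the ratio of cycles survived can be computed directly. This is only arithmetic on the two rounded numbers in the text; the function and constant names are illustrative.

```python
def cycle_ratio(cnt_cycles: float, copper_cycles: float) -> float:
    """How many times more bend cycles the CNT yarn survived than copper."""
    return cnt_cycles / copper_cycles


COPPER_CYCLES = 14_000    # "nearly 14,000 bends"
CNT_CYCLES = 2_500_000    # "almost 2.5 million cycles"

ratio = cycle_ratio(CNT_CYCLES, COPPER_CYCLES)
print(f"CNT yarn survived about {ratio:.0f}x as many bend cycles as copper")
```

With these rounded inputs the ratio comes out near 180, so the quoted cycle counts support an improvement in fatigue life of roughly two orders of magnitude.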

NTI's CNT yarns can be used in an array of applications including: copper wire replacement for aerospace, aviation and automotive; structural yarns, reinforcing matrix for structural composites; antennas; and motor windings.

January 16, 2013

50 Tesla and Other Superconducting Possibilities

1. Charging superconductors will get a lot more efficient and cost effective

2. Superconducting magnets could achieve 50 tesla in about 5 years

3. Superconducting wire should cost about 4 times less on a price performance basis in three years

4. Superconducting motors should be in a few hundred or a few thousand vehicles by 2020

5. 50 tesla magnets should enable a muon collider in the 2020s

The Department of Energy recently awarded Fermilab scientist Tengming Shen $2,500,000 to develop Bi2Sr2CaCu2Ox superconductors. He expects he could use this material to build magnets with a reach of up to 50 tesla. Shen's magnets could potentially be cooled with a simpler refrigeration unit. The aim is to turn this material, which has upper critical fields surpassing 100 tesla at 4.2 K and can be fabricated into a multifilamentary round wire, into practical magnet conductors that can generate fields above 20 tesla for the next generation of accelerators.

Studies suggest that reducing the present cost of the superconductor by a factor of two would bring the cost of 10-GW, 1200-mile-long superconducting cables to within range of that of conventional overhead lines. Since underground dc cables also offer substantial environmental, siting, and aesthetic benefits over conventional overhead transmission lines, they may become an attractive alternative in some situations. SuperPower Inc. is on track to improve the price-performance of its superconducting wire by a factor of 4.

Sumitomo is expecting to mass produce superconducting motors for buses by 2020.

The UK company Magnifye has developed ways to charge superconductors in a vastly more efficient system. Magnifye has developed a heat engine which converts thermal energy into currents of millions of amps. The thermal energy is used to create a series of magnetic waves which progressively magnetise the superconductor much in the same way a nail can be magnetised by stroking it over a magnet.

Ferropnictide superconductors, i.e., superconductors that contain Fe and As, have superconducting transition temperatures (Tc) up to 56 K and high upper critical fields (Hc2) over 100 tesla. The high Hc2 means these materials could be used in very high field magnets. Previous studies suggested that polycrystalline samples of these materials could not carry a large superconducting current because grain boundaries reduce the critical current density (Jc). Surprisingly, new results find that the opposite is true for wire made from (Ba0.6K0.4)Fe2As2. This material could enable superconducting magnets at 120 tesla.

Why It's Taking The U.S. So Long To Make Fusion Energy Work

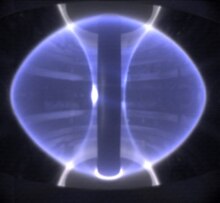

PLAINSBORO, N.J. -- Hidden in the woods two miles from Princeton University's main campus sits a drab white building easily mistakable for a warehouse. Inside is one of the Ivy League school's most expensive experiments: a 22-foot-tall metal spheroid surrounded by Crayola-colored magnets. About half a dozen blue beams ring the sphere horizontally, while another set, painted red, rise vertically from the floor to wrap the contraption, like fingers clutching a ball.

Last fall, construction workers hustled to finish an upgrade to yet another magnet, this one jutting through the center of the sphere like a Roman column. On a recent November afternoon, Michael Williams, the lab's head of engineering, weaved his way through workers and up a stainless steel scaffolding to get a better view.

"Fusion is an expensive science, because you're trying to build a sun in a bottle," Williams said.

This endeavor in the New Jersey woods, known as the National Spherical Torus Experiment, was created to study the physics of plasma, in the hopes that one day humans will be able to harness a new source of energy based on the reactions that power stars. The project has been shut down for two years to undergo an upgrade that will double its power. The improvement costs $94 million, and is paid for -- like the rest of the Princeton Plasma Physics Lab -- by the U.S. Department of Energy.

Impressive as it may appear, this experiment is small compared to what once stood there. Earlier in the day, while walking over to the site from his office, Williams pointed out a sign on the National Spherical Torus Experiment building that read "TFTR." The abbreviation stands for Tokamak Fusion Test Reactor, a bigger, more promising fusion experiment that was scrapped in the mid-1990s.

"I keep telling them to take that down," he said.

The history of the U.S. Department of Energy's magnetic fusion program is littered with half-completed experiments and never-realized ideas. Currently, the most ambitious project in all of fusion work is the International Thermonuclear Experimental Reactor, or ITER, a collaborative scientific effort backed by the European Union and six other nations, including the United States. Once it's built in southern France, ITER will be the largest fusion reactor ever. The plans for this project dwarf the three similar U.S. fusion experiments, including the one at Princeton, in both scale and expense.

But ITER is sputtering with delayed construction and ballooning costs, and U.S. physicists are increasingly worried that their work at home, such as the National Spherical Torus Experiment, will be sidelined to fund the international project. They see the domestic research as crucial to understanding the nature of the plasma used in certain fusion reactions -- crucial, even, to getting facilities like ITER built in the first place. Meanwhile, critics view magnetic fusion research as a money-wasting boondoggle that will never be able to produce energy as cheaply as methods like solar and wind power.

After the visit to the facility, Williams returned to his office and I met with his boss, Stewart Prager, the head of the Princeton lab. Sitting in a tidy glass-paneled office overlooking the woods, he recalled an old joke about fusion -- it was "30 years away 30 years ago, and it's 30 years away now" -- and explained why the quip has taken hold.

"The true pioneers in the field didn't fully appreciate how hard a scientific problem it would be," he said.

But then he added: "Even having said that, if you look back at documents from the past, they laid out how much it would cost. That amount of money was never nearly delivered."

Fusion scientists make an incredible proposition: We can power our cities, they say, with miniature, vacuum-sealed stars. According to those who study it, the benefits of fusion power, if it ever came to fruition, would be enormous. It requires no carbon drawn from the ground. Its fuel -- hydrogen harvested from seawater -- is inexhaustible. It emits no gases that warm the planet. And unlike its cousin fission, which is currently used in nuclear power plants, fusion produces little radioactive waste, and what it does produce can be recycled by the reactor.

The only hurdle, as many U.S. physicists tell it, is the billions of dollars needed before the first commercially viable watt of power is produced. Researchers lament the fact that the U.S. hasn't articulated a date for when it hopes to have fusion go online, while China and South Korea have set timetables to put fusion online in the 2040s.

A so-called magnetic confinement fusion reactor would work by spinning a cloud of hydrogen until it reaches several hundred million degrees Celsius -- at which point it would be so hot that no known material could contain it. Instead, high-powered magnets in a vacuum would envelop the ring of hydrogen plasma.

Spun with enough heat and pressure, the positively charged hydrogen atoms, stripped of their electrons, would begin to overcome their usual tendency to stay apart. They would fuse into helium, spitting out an extra neutron. When those neutrons embed into a surrounding blanket of lithium, they would warm it enough to boil water, spin a turbine and make electricity. The long-term goal is to create a self-sustaining reaction that produces more energy than is put in.

The oil shortages of the 1970s kick-started federally funded fusion research. When petroleum-pumping nations in the Middle East turned off the spigot in 1973 and then again in 1979, much of the world, including the U.S., was rattled by gas shortages and high prices. With Americans waiting in mile-long lines to fill up their tanks, there was a keen national interest in finding any fuel to replace oil.

The crises prompted Congress and President Jimmy Carter to create the Department of Energy, which immediately began to channel funding into alternative energy programs, including fusion. By the end of the '70s, experimental reactors were being built at the Massachusetts Institute of Technology and at Princeton -- including the latter's Tokamak Fusion Test Reactor, the "TFTR" whose outdated sign Michael Williams now walks past.

Adjusted for inflation, the U.S. was spending over $1 billion per year on magnetic confinement fusion research by 1977, according to Department of Energy figures collected by Fusion Power Associates, a nonprofit that promotes fusion research. But by the time Ronald Reagan was elected president in 1980, gas prices had dropped. Eyeing cuts to government spending, Reagan and his Republican colleagues in Congress tightened funding for research into fusion and other alternative energy sources.

"The Republicans hated the Department of Energy because they were messing around with the private sector energy business," said Steve Dean, a former Department of Energy official who oversaw fusion experiments in the 1970s and now runs Fusion Power Associates.

In 1984, however, as the Cold War thawed, Reagan inked a deal with the Soviet Union, along with Europe and Japan, to fund and build what would become ITER. India, China and South Korea would eventually sign up as well. And even with the downturn in U.S. funding, investments made in the '70s started paying off. In 1994, Princeton's TFTR produced what was then a record-breaking 10 megawatts, enough energy to keep 3,000 homes lit for... well, for nearly a second.

Actually, less than one second of power is a bigger deal than it might at first seem. Fusion research can only advance in baby steps across generations of scientists, say experts. First, their goal is to build a multimillion-dollar reactor capable of sustaining plasma for a second. Then, perhaps within a decade of achieving that, their goal is to construct yet another reactor that keeps the plasma going for a minute. It's all part of a painstaking march toward creating a self-sustaining reaction that lasts indefinitely.
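To put the TFTR record in everyday units, the figures quoted above (10 megawatts, 3,000 homes, roughly a second) can be turned into power per home and total energy. This is a rough sketch: the one-second duration is an assumption standing in for "nearly a second", and the names are illustrative.

```python
POWER_W = 10e6      # record fusion power: 10 megawatts
HOMES = 3_000       # homes quoted in the article
DURATION_S = 1.0    # assumption: "nearly a second" rounded to 1 s


def per_home_kw(power_w: float, homes: int) -> float:
    """Average power available to each home, in kilowatts."""
    return power_w / homes / 1e3


def energy_kwh(power_w: float, seconds: float) -> float:
    """Energy delivered at constant power, in kilowatt-hours (1 kWh = 3.6e6 J)."""
    return power_w * seconds / 3.6e6


print(f"Per home: {per_home_kw(POWER_W, HOMES):.1f} kW")
print(f"Total energy: {energy_kwh(POWER_W, DURATION_S):.1f} kWh")
```

The per-home share works out to a few kilowatts, while the total energy over one second is only a few kilowatt-hours, which is why the milestone matters as physics rather than as electricity supply.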

"One would have expected these ground breaking results to lead to an upsurge on fusion funding in the U.S.," said Dale Meade, the former deputy director of the Princeton lab, in an email to HuffPost. "It didn't."

Several months later, in Washington, D.C., then-Rep. Newt Gingrich (R-Ga.) gaveled in his first session as Speaker of the House. The GOP-led Congress soon slashed spending yet again in order to balance the federal budget.

"It was a lot of people losing their jobs and being knocked out of the field," said Raymond Fonck, an experimental fusion physicist at the University of Wisconsin who did some work on TFTR. "Some people left the field out of disgust."

Overnight, funding for magnetic fusion research fell by 33 percent -- some $173 million in today's dollars. Princeton's TFTR was shut down. Plans for a new machine to be built where TFTR stood were postponed indefinitely. (Today, the National Spherical Torus Experiment stands on that site.) And the U.S. pulled out of its agreement to help fund ITER, citing cost concerns -- only to rejoin a few years later.

The magnetic fusion program "never really recovered from that budget cut," said Meade.

With less money available, the Department of Energy went ahead and funded a less expensive experiment at Princeton that became the National Spherical Torus Experiment, which began operating in 1999. All the while, Europe maintained its magnetic fusion program, and China and South Korea each started programs of their own. (In fact, designs for the scrapped U.S. experiment were eventually incorporated into reactors built in both countries.)

Magnetic fusion researchers received $505 million from the federal government for the 2014 fiscal year -- about half of what they used to get, when adjusted for inflation. About $200 million of that pot went overseas to help build ITER.
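The split described above is easy to quantify. The sketch below uses only the two rounded figures from the paragraph (fiscal 2014, in millions of dollars); the variable names are illustrative.

```python
TOTAL_MUSD = 505   # federal magnetic fusion funding, fiscal 2014
ITER_MUSD = 200    # approximate portion sent overseas for ITER

domestic_musd = TOTAL_MUSD - ITER_MUSD
iter_share = ITER_MUSD / TOTAL_MUSD

print(f"ITER share: {iter_share:.1%}")
print(f"Left for domestic programs: ${domestic_musd} million")
```

On these numbers roughly two of every five dollars went to ITER, which is the source of the domestic researchers' concern about being sidelined.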

And even with the downturn since the 1980s, critics still say the program receives too much funding given that it has yet to build an economically viable reactor.

"The magnetic fusion energy program is one of these programs that gets a steady flow of money like clockwork, although it may not receive on a constant dollar basis what it used to," said Robert Alvarez, a former senior policy adviser for the Energy Department who now works at the Institute for Policy Studies, a Washington think tank. "But it still commands a great deal of money in the energy research and development portfolio. You've got to ask yourself: When is it time to fish or cut bait on this?"

Alvarez and other skeptics believe that magnetic fusion will never be inexpensive enough to compete with other sustainable energy sources, because new fusion reactors require billions of dollars to build and decades to complete.

"At $10 to $20 billion a pop, it just doesn't lend itself to innovation like wind or solar," said Thomas Cochran, a consultant with the Natural Resources Defense Council. He added that the "people who are closest to the technology" are unable to see the dead ends.

While money for magnetic fusion was cut during the '90s, funding for an alternate form of fusion, called inertial confinement fusion, took a dramatic leap. After the U.S. signed a treaty banning nuclear weapons testing, Congress paid for the construction of the world's largest laser 40 miles east of San Francisco, designed to compress a pellet of hydrogen with enough heat and pressure for its atoms to fuse into helium.

The laser-based approach to fusion is meant to offer both a potential new energy source and a way to develop hydrogen bombs without actually blowing anything up. Its early years, however, have not been entirely trouble-free. After falling five years behind schedule, going three times over budget and failing to achieve its 2012 goal of producing a self-sustaining reaction, the laser was labeled a fiasco by critics. Finally, though, the lab was able to produce its first significant fusion reaction in 2013.

Although magnetic fusion is further along, the stumbles of inertial confinement may have given all of fusion research a black eye. With magnetic fusion, the Department of Energy is under yet another budgetary constraint today. Eleven years behind schedule and crippled by decentralized management, ITER is becoming increasingly expensive. The U.S. is obligated to fund about 9 percent of the project, and what was once a $1 billion commitment is swelling beyond the $4 billion mark.

With Congress gridlocked, the money must come from within the department. In October, a Department of Energy advisory committee floated the idea of shutting down the fusion reactor at MIT, and, if necessary, shutting down one of the two other experimental reactors in the U.S. (the one at Princeton or another at General Atomics in San Diego). Even though the resolution was non-binding, the decision drew the ire of many fusion physicists. Fifty experts signed an emphatic letter to the department saying that the "underlying strategic vision that guides this report is flawed."

"The DOE is committed to creating opportunities for its fusion researchers to assert strong leadership in the next decade and beyond," said Ed Synakowski, the associate director at the Department of Energy who oversees fusion funding. He said that while the department has proposed closing the MIT lab, it would close one of the other two reactors only under dire budgetary conditions.

Earlier this year, the Obama administration slated the reactor at MIT for closure. An aggressive lobbying effort by Massachusetts politicians was the only thing that kept it open.

The possible closures have put the fusion community on edge. But what some find more worrying is the idea that the young scientific minds needed to tackle this multi-generational problem will instead look for careers in disciplines that are better funded and more stable.

"The older generation," said Fonck, "we get concerned that the younger generation will say, 'Well, there's no jobs in this field.'"

PROTO-SPHERA

Proponents: F. Alladio, A. Mancuso, P. Micozzi, L. Pieroni

Spherical tokamak

From Wikipedia, the free encyclopedia

- Not to be confused with the spheromak, another topic in fusion research.

The spherical tokamak is an offshoot of the conventional tokamak design. Proponents claim that it has a number of substantial practical advantages over these devices. For this reason the ST has generated considerable interest since the late 1980s. However, development remains effectively one generation behind efforts like JET. Major experiments in the field include the pioneering START and MAST at Culham in the UK, the US's NSTX and Russian Globus-M.

Research has questioned whether spherical tokamaks are a route to lower cost reactors. Further research is needed to better understand how such devices scale. Even in the event that STs do not lead to lower cost approaches to power generation, they are still lower cost in general; this makes them attractive devices for plasma physics, or as high-energy neutron sources.

History

Aspect ratio

Fusion reactor efficiency is based on the amount of power released from fusion reactions compared with the power needed to keep the plasma hot. This can be calculated from three key measures: the temperature of the plasma, its density, and the length of time the reaction is maintained. The product of these three measures is the "fusion triple product", and in order to be economic it must reach the Lawson criterion, ≥ 3 × 10^21 keV · s / m³.[1][2]

Tokamaks are the leading approach within the larger group of magnetic fusion energy (MFE) designs, the grouping of systems that attempt to confine a plasma using powerful magnetic fields. In the MFE approach, it is the time axis that is considered most important for ongoing development. Tokamaks confine their fuel at low pressure (around 1/millionth of atmospheric) but high temperatures (150 million Celsius), and attempt to keep those conditions stable for increasing times on the order of seconds to minutes.[3]
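As a rough illustration of the triple product described above, the following Python sketch multiplies density, temperature and confinement time and compares the result with the 3 × 10^21 keV · s / m³ threshold quoted in the text. The plasma numbers are hypothetical and chosen only to show the arithmetic; the function names are not from any library.

```python
# Threshold quoted in the text, in keV * s / m^3.
LAWSON_TRIPLE_PRODUCT = 3e21


def triple_product(density_m3, temperature_kev, confinement_s):
    """Return the fusion triple product n * T * tau in keV * s / m^3."""
    return density_m3 * temperature_kev * confinement_s


def meets_lawson(density_m3, temperature_kev, confinement_s):
    """True if the triple product reaches the quoted threshold."""
    product = triple_product(density_m3, temperature_kev, confinement_s)
    return product >= LAWSON_TRIPLE_PRODUCT


# Hypothetical plasma (assumed values, not measurements from any machine):
# 1e20 particles per cubic metre, 15 keV, confined for 3 seconds.
value = triple_product(1e20, 15.0, 3.0)
print(f"triple product = {value:.2e} keV s / m^3")
print("meets threshold:", meets_lawson(1e20, 15.0, 3.0))
```

Because the three factors simply multiply, a shortfall in one (for example, a confinement time of one second instead of three) has to be made up by a proportional gain in another.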

A key measure of MFE reactor economics is "beta", β, the ratio of plasma pressure to the magnetic pressure.[4][5] Improving beta means that you need to use, in relative terms, less energy to generate the magnetic fields for any given plasma pressure (or density). The price of magnets scales roughly with β^(1/2), so reactors operating at higher betas are less expensive for any given level of confinement. Tokamaks operate at relatively low betas, a few %, and generally require superconducting magnets in order to have enough field strength to reach useful densities.
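The definition of beta can be made concrete with a short Python sketch. It assumes the standard expressions for magnetic pressure, B²/(2μ0), and for the pressure of a plasma whose electrons and ions share one density and temperature, 2nkT. The plasma and field values are hypothetical and serve only to show that the result lands in the "a few %" range mentioned above.

```python
import math

MU_0 = 4e-7 * math.pi            # vacuum permeability in T * m / A
KEV_TO_JOULE = 1.602176634e-16   # joules per keV


def plasma_pressure_pa(density_m3, temperature_kev):
    """Pressure of electrons plus ions at equal density and temperature."""
    return 2.0 * density_m3 * temperature_kev * KEV_TO_JOULE


def magnetic_pressure_pa(field_tesla):
    """Magnetic pressure B^2 / (2 * mu_0) in pascals."""
    return field_tesla ** 2 / (2.0 * MU_0)


def beta(density_m3, temperature_kev, field_tesla):
    """Ratio of plasma pressure to magnetic pressure."""
    return (plasma_pressure_pa(density_m3, temperature_kev)
            / magnetic_pressure_pa(field_tesla))


# Hypothetical tokamak-like values (assumptions for illustration only).
print(f"beta at 5 T:  {beta(1e20, 10.0, 5.0):.3f}")
print(f"beta at 10 T: {beta(1e20, 10.0, 10.0):.4f}")
```

Doubling the field at fixed plasma pressure cuts beta by a factor of four, which is why a design that holds the same plasma with a weaker field is attractive.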

The limiting factor in improving beta is the size of the magnets. Tokamaks use a series of ring-shaped magnets around the confinement area, and their physical dimensions mean that the hole in the middle of the torus can be reduced only so much before the magnet windings are touching. This limits the aspect ratio, A, of the reactor to about 2.5; the diameter of the reactor as a whole could be about 2.5 times the cross-sectional diameter of the confinement area. Some experimental designs were slightly under this limit, while many reactors had much higher A.

Reducing A

During the 1980s, researchers at Oak Ridge National Laboratory (ORNL), led by Ben Carreras and Tim Hender, were studying the operations of tokamaks as A was reduced. They noticed, based on magnetohydrodynamic considerations, that tokamaks were inherently more stable at low aspect ratios. In particular, the classic "kink instability" was strongly suppressed. Other groups expanded on this body of theory, and found that the same was true for the high-order ballooning instability as well. This suggested that a low-A machine would not only be less expensive to build, but have better performance as well.[6]

One way to reduce the size of the magnets is to re-arrange them around the confinement area. This was the idea behind the "compact tokamak" designs, typified by the Alcator C-Mod, Riggatron and IGNITOR. The latter two of these designs place the magnets inside the confinement area, so the toroidal vacuum vessel can be replaced with a cylinder. The decreased distance between the magnets and plasma leads to much higher betas, so conventional (non-superconducting) magnets could be used.[7] The downside to this approach, one that was widely criticized, is that it places the magnets directly in the high-energy neutron flux of the fusion reactions.[8] In operation the magnets would be rapidly eroded, requiring the vacuum vessel to be opened and the entire magnet assembly replaced after a month or so of operation.

Around the same time, several advances in plasma physics were making their way through the fusion community. Of particular importance were the concepts of elongation and triangularity, referring to the cross-sectional shape of the plasma. Early tokamaks had all used circular cross-sections simply because that was the easiest to model and build, but over time it became clear that C or (more commonly) D-shaped plasma cross-sections led to higher performance. These shapes produce plasmas with high "shear", which distributes and breaks up turbulent eddies in the plasma.[6] These changes led to the "advanced tokamak" designs, which include ITER.[9]

Spherical tokamaks

In 1984,[10] Martin Peng of ORNL proposed an alternate arrangement of the magnet coils that would greatly reduce the aspect ratio while avoiding the erosion issues of the compact tokamak. Instead of wiring each magnet coil separately, he proposed using a single large conductor in the center, and wiring the magnets as half-rings off of this conductor. What was once a series of individual rings passing through the hole in the center of the reactor was reduced to a single post, allowing for aspect ratios as low as 1.2.[5][11] This means that STs can reach the same operational triple product numbers as conventional designs using one tenth the magnetic field.

The design, naturally, also included the advances in plasma shaping that were being studied concurrently. Like all modern designs, the ST uses a D-shaped plasma cross section. If you consider a D on the right side and a reversed D on the left, as the two approach each other (as A is reduced) eventually the vertical surfaces touch and the resulting shape is a circle. In 3D, the outer surface is roughly spherical. They named this layout the "spherical tokamak", or ST. These studies suggested that the ST layout would include all the qualities of the advanced tokamak and the compact tokamak, would strongly suppress several forms of turbulence, reach high β, have high self-magnetism and be less costly to build.[12]

The ST concept appeared to represent an enormous advance in tokamak design. However, it was being proposed during a period when US fusion research budgets were being dramatically scaled back. ORNL was provided with funds to develop a suitable central column built out of a high-strength copper alloy called "Glidcop".[13] However, they were unable to secure funding to build a demonstration machine, "STX".[12][14]

From spheromak to ST

Failing to build an ST at ORNL, Peng began a worldwide effort to interest other teams in the ST concept and get a test machine built. One way to do this quickly would be to convert a spheromak machine to the ST layout.[11]

Spheromaks are essentially "smoke rings" of plasma that are internally self-stable. They can, however, drift about within their confinement area. The typical solution to this problem was to wrap the area in a sheet of copper, or more rarely, place a copper conductor down the center. When the spheromak approaches the conductor, a magnetic field is generated that pushes it away again. A number of experimental spheromak machines were built in the 1970s and early 80s, but demonstrated performance that simply was not interesting enough to suggest further development.

Machines with the central conductor had a strong mechanical resemblance to the ST design, and could be converted with relative ease. The first such conversion was made to the Heidelberg Spheromak Experiment, or HSE. Built at Heidelberg University in the early 1980s, HSE was quickly converted to an ST in 1987 by adding new magnets to the outside of the confinement area and attaching them to its central conductor.[15] Although the new configuration only operated "cold", far below fusion temperatures, the results were promising and demonstrated all of the basic features of the ST.

Several other groups with spheromak machines made similar conversions, notably the rotamak at the Australian Nuclear Science and Technology Organisation and the SPHEX machine.[16] In general they all found an increase in performance of a factor of two or more. This was an enormous advance, and the need for a purpose-built machine became pressing.

START and newer systems

Peng's advocacy also caught the interest of Derek Robinson, of the United Kingdom Atomic Energy Authority (UKAEA) fusion center at Culham.[17] What is today known as the Culham Centre for Fusion Energy was set up in the 1960s to gather together all of the UK's fusion research, formerly spread across several sites, and Robinson had recently been promoted to running several projects at the site.

Robinson was able to gather together a team and secure funding on the order of 100,000 pounds to build an experimental machine, the Small Tight Aspect Ratio Tokamak, or START. Several parts of the machine were recycled from earlier projects, while others were loaned from other labs, including a 40 keV neutral beam injector from ORNL.[18] Before it started operation there was considerable uncertainty about its performance, and predictions that the project would be shut down if confinement proved to be similar to spheromaks.

Construction of START began in 1990; it was assembled rapidly and started operation in January 1991.[14] Its earliest operations quickly put any theoretical concerns to rest. Using ohmic heating alone, START demonstrated betas as high as 12%, almost matching the record of 12.6% on the DIII-D machine.[11][19] The results were so good that an additional 10 million pounds of funding was provided over time, leading to a major re-build in 1995. When neutral beam heating was turned on, beta jumped to 40%, beating any conventional design by 3 times.[19]

Additionally, START demonstrated excellent plasma stability. A practical rule of thumb in conventional designs is that as the operational beta approaches a certain value normalized for the machine size, ballooning instability destabilizes the plasma. This so-called "Troyon limit" is normally 4, and generally limited to about 3.5 in real world machines. START improved this dramatically to 6. The limit depends on size of the machine, and indicates that machines will have to be built of at least a certain size if they wish to reach some performance goal. With START's much higher scaling, the same limits would be reached with a smaller machine.[20]
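The normalized limit discussed above is often written as β_max[%] = β_N · I[MA] / (a[m] · B[T]). The source does not spell out this form, so it is used here as an assumption. The Python sketch below compares the 3.5 and 6 values from the text for one hypothetical machine whose current, minor radius and field are invented for illustration.

```python
def troyon_beta_limit_percent(beta_n, current_ma, minor_radius_m, field_t):
    """Beta limit in percent for a given normalized limit beta_n.

    Assumes the commonly used form beta_max[%] = beta_n * I / (a * B),
    with I in megaamperes, a in metres and B in tesla.
    """
    return beta_n * current_ma / (minor_radius_m * field_t)


# Hypothetical machine parameters (assumed, not taken from START or DIII-D).
CURRENT_MA = 1.0
MINOR_RADIUS_M = 0.5
FIELD_T = 2.0

conventional = troyon_beta_limit_percent(3.5, CURRENT_MA, MINOR_RADIUS_M, FIELD_T)
start_like = troyon_beta_limit_percent(6.0, CURRENT_MA, MINOR_RADIUS_M, FIELD_T)

print(f"limit with beta_N = 3.5: {conventional:.1f} %")
print(f"limit with beta_N = 6.0: {start_like:.1f} %")
print(f"ratio: {start_like / conventional:.2f}")
```

For identical current, size and field, the higher normalized limit translates directly into a proportionally higher allowable beta, which is the sense in which a smaller machine could reach the same performance goal.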

Rush to build STs

START proved Peng and Strickler's predictions; the ST had performance an order of magnitude better than conventional designs, and cost much less to build as well. In terms of overall economics, the ST was an enormous step forward.

Moreover, the ST was a new approach, and a low-cost one. It was one of the few areas of mainline fusion research where real contributions could be made on small budgets. This sparked off a series of ST developments around the world, in particular the National Spherical Torus Experiment (NSTX) and Pegasus experiments in the US, Globus-M in Russia, and the UK's follow-on to START, MAST. START itself found new life as part of the Proto-Sphera project in Italy, where experimenters are attempting to eliminate the central column by passing the current through a secondary plasma.[21]

Design

Tokamak reactors consist of a toroidal vacuum tube surrounded by a series of magnets. One set of magnets is logically wired in a series of rings around the outside of the tube, but is physically connected through a common conductor in the center. The central column is also normally used to house the solenoid that forms the inductive loop for the ohmic heating system (and pinch current).

The canonical example of the design can be seen in the small tabletop ST device made at Flinders University,[22] which uses a central column made of copper wire wound into a solenoid, return bars for the toroidal field made of vertical copper wires, and a metal ring connecting the two and providing mechanical support to the structure.

Stability within the ST

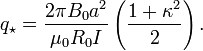

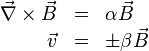

Advances in plasma physics in the 1970s and 80s led to a much stronger understanding of stability issues, and this developed into a series of "scaling laws" that can be used to quickly determine rough operational numbers across a wide variety of systems. In particular, Troyon's work on the critical beta of a reactor design is considered one of the great advances in modern plasma physics. Troyon's work provides a beta limit where operational reactors will start to see significant instabilities, and demonstrates how this limit scales with size, layout, magnetic field and current in the plasma.

However, Troyon's work did not consider extreme aspect ratios, work that was later carried out by a group at the Princeton Plasma Physics Laboratory.[23] This starts with a development of a useful beta for a highly asymmetric volume:

$$\beta = \frac{2 \mu_0 \langle p \rangle}{\langle B^2 \rangle}$$

where $\langle B^2 \rangle$ is the volume-averaged squared magnetic field (as opposed to Troyon's use of the field in the vacuum outside the plasma, $B_0$). Following Freidberg,[24] this beta is then fed into a modified version of the safety factor:

$$q_\star = \frac{2 \pi B_0 a^2}{\mu_0 R_0 I} \left( \frac{1 + \kappa^2}{2} \right)$$

where $B_0$ is the vacuum magnetic field, $a$ is the minor radius, $R_0$ the major radius, $I$ the plasma current, and $\kappa$ the elongation. In this definition it should be clear that decreasing aspect ratio, $R_0 / a$, leads to higher average safety factors. These definitions allowed the Princeton group to develop a more flexible version of Troyon's critical beta:

$$\beta_{crit} = 5 \langle \beta_N \rangle \left( \frac{1 + \kappa^2}{2} \right) \frac{\epsilon}{q_\star}$$

where $\epsilon$ is the inverse aspect ratio $a / R_0$ and $\langle \beta_N \rangle$ is a constant scaling factor that is about 0.03 for any $q_\star$ greater than 2. Note that the critical beta scales with aspect ratio, although not directly, because $q_\star$ also includes aspect ratio factors. Numerically, it can be shown that $\beta_{crit}$ is maximized for:

[…] of 2 and an aspect ratio of 1.25:

[…] with aspect ratio is evident.
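The dependence of the critical beta on aspect ratio can be sketched numerically. The Python example below assumes the Troyon-type form β_crit = 5 · β_N · ((1 + κ²) / 2) · ε / q*, with β_N ≈ 0.03 as stated in the text and q* fixed at 2, the lower edge of the range where that constant is said to hold. Both the formula's exact form and the choice q* = 2 are assumptions, since the article's own equations and worked numbers did not survive. The conventional aspect ratio of 2.5 comes from the 5 m major radius and 2 m minor radius used in the power scaling discussion.

```python
def critical_beta(elongation, aspect_ratio, q_star=2.0, beta_n=0.03):
    """Assumed Troyon-type critical beta (as a fraction, not percent).

    beta_crit = 5 * beta_n * ((1 + kappa^2) / 2) * epsilon / q_star,
    where epsilon = 1 / aspect_ratio is the inverse aspect ratio.
    """
    epsilon = 1.0 / aspect_ratio
    shape_factor = (1.0 + elongation ** 2) / 2.0
    return 5.0 * beta_n * shape_factor * epsilon / q_star


# Elongation 2 and aspect ratio 1.25 are the ST values named in the text.
st_beta = critical_beta(elongation=2.0, aspect_ratio=1.25)
# Same elongation at aspect ratio 2.5 (5 m major radius, 2 m minor radius).
conventional_beta = critical_beta(elongation=2.0, aspect_ratio=2.5)

print(f"ST critical beta:           {st_beta:.3f}")
print(f"conventional critical beta: {conventional_beta:.3f}")
print(f"ratio:                      {st_beta / conventional_beta:.2f}")
```

With q* held fixed, halving the aspect ratio doubles the critical beta, which is the linear dependence the passage refers to.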

Power scaling

Beta is an important measure of performance, but in the case of a reactor designed to produce electricity, there are other practical issues that have to be considered. Among these is the power density, which offers an estimate of the size of the machine needed for a given power output. This is, in turn, a function of the plasma pressure, which is in turn a function of beta. At first glance it might seem that the ST's higher betas would naturally lead to higher allowable pressures, and thus higher power density. However, this is only true if the magnetic field remains the same, because beta is the ratio of plasma pressure to magnetic pressure.

If one imagines a toroidal confinement area wrapped with ring-shaped magnets, it is clear that the magnetic field is greater on the inside radius than the outside; this is the basic stability problem that the tokamak's electrical current addresses. However, the difference in that field is a function of aspect ratio; an infinitely large toroid would approximate a straight solenoid, while an ST maximizes the difference in field strength. Moreover, as there are certain aspects of reactor design that are fixed in size, the aspect ratio might be forced into certain configurations. For instance, production reactors would use a thick "blanket" containing lithium around the reactor core in order to capture the high-energy neutrons being released, both to protect the rest of the reactor mass from these neutrons as well as produce tritium for fuel. The size of the blanket is a function of the neutron's energy, which is 14 MeV in the D-T reaction regardless of the reactor design. Thus the blanket would be the same for an ST or traditional design, about a meter across.

In this case further consideration of the overall magnetic field is needed when considering the betas. Working inward through the reactor volume toward the inner surface of the plasma we would encounter the blanket, "first wall" and several empty spaces. As we move away from the magnet, the field reduces in a roughly linear fashion. If we consider these reactor components as a group, we can calculate the magnetic field that remains on the far side of the blanket, at the inner face of the plasma:

$$B_0 = (1 - \epsilon - \epsilon_b) B_{max}$$

where $\epsilon$ is the inverse aspect ratio, $\epsilon_b$ is the blanket thickness divided by the major radius, and $B_{max}$ is the maximum field at the magnet.

[…] as a general principle, one can eliminate the blanket on the inside face and leave the central column open to the neutrons. In this case, $\epsilon_b$ is zero. Considering a central column made of copper, we can fix the maximum field generated in the coil, $B_{max}$, to about 7.5 T. Using the ideal numbers from the section above:

[…] of 15 T, and a blanket of 1.2 meters thickness. First we calculate $\epsilon$ to be 1/(5/2) = 0.4 and $\epsilon_b$ to be 1.2/5 = 0.24, then:

[…]
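The comparison set up here can be sketched in Python. The example assumes the linear fall-off B_0 = (1 − ε − ε_b) · B_max suggested by the description above, and estimates the average pressure as β · B_0² / (2μ0), a simplification that ignores the volume averaging used in the Princeton treatment. The beta values of 0.15 and 0.075 are illustrative assumptions, not the article's lost figures. The field limits, blanket thickness and radii are the ones given in the text.

```python
import math

MU_0 = 4e-7 * math.pi     # vacuum permeability in T * m / A
PA_PER_ATM = 101325.0     # pascals per standard atmosphere


def field_at_plasma(b_max, epsilon, epsilon_b):
    """Assumed linear fall-off: B_0 = (1 - epsilon - epsilon_b) * B_max."""
    return (1.0 - epsilon - epsilon_b) * b_max


def average_pressure_atm(beta, b0):
    """Simplified estimate p = beta * B_0^2 / (2 * mu_0), in atmospheres."""
    return beta * b0 ** 2 / (2.0 * MU_0) / PA_PER_ATM


# ST: copper column limited to 7.5 T, aspect ratio 1.25, no inboard blanket.
st_b0 = field_at_plasma(b_max=7.5, epsilon=1.0 / 1.25, epsilon_b=0.0)
# Conventional: 15 T superconducting magnets, R = 5 m, a = 2 m, 1.2 m blanket.
conv_b0 = field_at_plasma(b_max=15.0, epsilon=2.0 / 5.0, epsilon_b=1.2 / 5.0)

# Illustrative betas (assumptions): the ST is given twice the beta.
st_pressure = average_pressure_atm(beta=0.15, b0=st_b0)
conv_pressure = average_pressure_atm(beta=0.075, b0=conv_b0)

print(f"ST:           B_0 = {st_b0:.2f} T, pressure = {st_pressure:.1f} atm")
print(f"conventional: B_0 = {conv_b0:.2f} T, pressure = {conv_pressure:.1f} atm")
```

Under these assumptions the conventional layout ends up with the higher plasma pressure despite its lower beta, because pressure grows with the square of the field that actually reaches the plasma.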

Advantages

STs have two major advantages over conventional designs.

The first is practical. Using the ST layout places the toroidal magnets much closer to the plasma, on average. This greatly reduces the amount of energy needed to power the magnets in order to reach any particular level of magnetic field within the plasma. Smaller magnets cost less, reducing the cost of the reactor. The gains are so great that superconducting magnets may not be required, leading to even greater cost reductions. START placed the secondary magnets inside the vacuum chamber, but in modern machines these have been moved outside and can be superconducting.[26]

The other advantage has to do with the stability of the plasma. Since the earliest days of fusion research, the problem in making a useful system has been a number of plasma instabilities that only appeared as the operating conditions moved ever closer to useful ones for fusion power. In 1954 Edward Teller hosted a meeting exploring some of these issues, and noted that he felt plasmas would be inherently more stable if they were following convex lines of magnetic force, rather than concave.[27] It was not clear at the time if this manifested itself in the real world, but over time the wisdom of these words became apparent.

In the tokamak, stellarator and most pinch devices, the plasma is forced to follow helical magnetic lines. This alternately moves the plasma from the outside of the confinement area to the inside. While on the outside, the particles are being pushed inward, following a concave line. As they move to the inside they are being pushed outward, following a convex line. Thus, following Teller's reasoning, the plasma is inherently more stable on the inside section of the reactor. In practice the actual limits are suggested by the "safety factor", q, which varies over the volume of the plasma.[28]

In a traditional circular cross-section tokamak, the plasma spends about the same time on the inside and the outside of the torus; slightly less on the inside because of the shorter radius. In the advanced tokamak with a D-shaped plasma, the inside surface of the plasma is significantly enlarged and the particles spend more time there. However, in a normal high-A design, q varies only slightly as the particle moves about, as the relative distance from the inside to the outside is small compared to the radius of the machine as a whole (the definition of aspect ratio). In an ST machine, the variance from "inside" to "outside" is much larger in relative terms, and the particles spend much more of their time on the "inside". This leads to greatly improved stability.[25]

It is possible to build a traditional tokamak that operates at higher betas, through the use of more powerful magnets. To do this, the current in the plasma must be increased in order to generate the toroidal magnetic field of the right magnitude. This drives the plasma ever closer to the Troyon limits where instabilities set in. The ST design, through its mechanical arrangement, has much better q and thus allows for much more magnetic power before the instabilities appear. Conventional designs hit the Troyon limit around 3.5, whereas START demonstrated operation at 6.[19]

Disadvantages

The ST has three distinct disadvantages compared to "conventional" advanced tokamaks with higher aspect ratios.

The first issue is that the overall pressure of the plasma in an ST is lower than conventional designs, in spite of higher beta. This is due to the limits of the magnetic field on the inside of the plasma, B_max. This limit is theoretically the same in the ST and conventional designs, but as the ST has a much lower aspect ratio, the effective field changes more dramatically over the plasma volume.[29]

The second issue is both an advantage and disadvantage. The ST is so small, at least in the center, that there is little or no room for superconducting magnets. This is not a deal-breaker for the design, as the fields from conventional copper wound magnets are enough for the ST design. However, this means that power dissipation in the central column will be considerable. Engineering studies suggest that the maximum field possible will be about 7.5 T, much lower than is possible with a conventional layout. This places a further limit on the allowable plasma pressures.[29] However, the lack of superconducting magnets greatly lowers the price of the system, potentially offsetting this issue economically.

The lack of shielding also means the magnet is directly exposed to the interior of the reactor. It is subject to the full heating flux of the plasma, and the neutrons generated by the fusion reactions. In practice, this means that the column would have to be replaced fairly often, likely on the order of a year, greatly affecting the availability of the reactor.[30] In production settings, the availability is directly related to the cost of electrical production. Experiments are underway to see if the conductor can be replaced by a z-pinch plasma[31] or liquid metal conductor.[32]

Finally, the highly asymmetrical plasma cross sections and tightly wound magnetic fields require very high toroidal currents to maintain. Normally this would require large amounts of secondary heating systems, like neutral beam injection. These are energetically expensive, so the ST design relies on high bootstrap currents for economical operation.[29] Luckily, high elongation and triangularity are the features that give rise to these currents, so it is possible that the ST will actually be more economical in this regard.[33] This is an area of active research.

List of operational ST machines

- MAST, Culham Science Center, United Kingdom

- Globus-M, Ioffe Institute, Russia

- NSTX, Princeton Plasma Physics Laboratory, United States

- Proto-Sphera (formerly START), ENEA, Italy

- TST-2, University of Tokyo, Japan

- SUNIST, Tsinghua University, China

- PEGASUS, University of Wisconsin-Madison, United States

Dynomak

From Wikipedia, the free encyclopedia

Dynomak is a spheromak[1] fusion reactor concept developed by the University of Washington using U.S. Department of Energy funding.[2] [3]

The project started as a class project taught by UW professor Thomas Jarboe; after the end of the class, the design was continued by Jarboe and PhD student Derek Sutherland, who had previously worked on reactor design at MIT.[2]

Unlike other fusion reactor designs (such as ITER, currently under construction in France), the Dynomak would be, according to its engineering team, comparable in cost to a conventional coal plant.[2]

The prototype currently at UW, about a tenth the scale of a commercial project, is able to sustain plasma efficiently. Higher output would require the scaling up of the project and a higher plasma temperature.[2]

A cost analysis was published in April 2014 and detailed results would be presented at the International Atomic Energy Agency's Fusion Energy Conference on October 17, 2014.[2]

Current results as of the Innovative Confinement Concepts Workshop in 2014 show performance of the HIT-SI high-beta spheromak operating at plasma densities of 5×10^19 m^-3, temperatures of 60 eV, and a maximum operation time of 1.5 ms.[citation needed] No confinement time results are given. At these temperatures no fusion reactions, sustainment, alpha heating, or neutron production is expected.
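To put these numbers in context, the sketch below estimates the fusion triple product (density × temperature × confinement time) for the quoted HIT-SI figures. Since no confinement time is reported, it assumes the 1.5 ms operation time as a generous upper bound. The comparison value of about 3×10^21 keV·s/m^3 is the commonly quoted approximate requirement for deuterium-tritium ignition and is not a figure from the workshop results.

```python
# Commonly quoted approximate D-T ignition requirement (not from the source text).
DT_IGNITION_TRIPLE_PRODUCT = 3e21  # keV * s / m^3


def triple_product(density_m3: float, temperature_kev: float, confinement_s: float) -> float:
    """Return n * T * tau in keV * s / m^3."""
    return density_m3 * temperature_kev * confinement_s


density = 5e19          # m^-3, quoted HIT-SI plasma density
temperature = 60e-3     # keV, i.e. the quoted 60 eV
tau_upper_bound = 1.5e-3  # s, ASSUMPTION: operation time used as an upper bound

ntt = triple_product(density, temperature, tau_upper_bound)
shortfall = DT_IGNITION_TRIPLE_PRODUCT / ntt

print(f"triple product (upper bound): {ntt:.2e} keV*s/m^3")
print(f"shortfall versus D-T ignition: about {shortfall:.1e}x")
```

Under these assumptions the result is several hundred thousand times below the ignition requirement, consistent with the statement that no fusion reactions are expected at these conditions.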

Spheromak


- Not to be confused with the spherical tokamak, another topic in fusion research.

The physics of spheromaks and of their collisions is similar to that of a variety of astrophysical events, such as coronal loops and filaments, relativistic jets and plasmoids. They are particularly useful for studying magnetic reconnection events, when two or more spheromaks collide. Spheromaks are easy to generate using a "gun" that ejects spheromaks off the end of an electrode into a holding area, called the flux conserver. This has made them useful in the laboratory setting, and spheromak guns are relatively common in astrophysics labs. These devices are often, confusingly, referred to simply as "spheromaks" as well; the term has two meanings.

Spheromaks have been proposed as a magnetic fusion energy concept due to their long confinement times, which were on the same order as those of the best tokamaks when they were first studied. Although they had some successes during the 1970s and 80s, these small and lower-energy devices had limited performance and most spheromak research ended when fusion funding was dramatically curtailed in the late 1980s. However, in the late 1990s research demonstrated that hotter spheromaks have better confinement times, and this led to a second wave of spheromak machines. Spheromaks have also been used to inject plasma into a bigger magnetic confinement experiment like a tokamak.[2]

History

The spheromak has undergone several distinct periods of investigation, with the greatest efforts during the 1980s, and a reemergence in the 2000s.

Background work in astrophysics

A key concept in the understanding of the spheromak is magnetic helicity, a value that describes the "twistedness" of the magnetic field in a plasma.
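For reference, magnetic helicity is conventionally defined as the volume integral of the dot product of the magnetic vector potential and the magnetic field. The symbols H, A and V below are notation assumed here for illustration; they do not appear in the surrounding text.

```latex
H = \int_V \mathbf{A} \cdot \mathbf{B} \, dV ,
\qquad \mathbf{B} = \nabla \times \mathbf{A}
```

A field whose lines are linked or twisted around one another gives a nonzero H, which is why the quantity serves as a measure of "twistedness".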

The earliest work on these concepts was developed by Hannes Alfvén in 1943,[3] which won him the 1970 Nobel Prize in Physics. His development of the concept of Alfvén waves explained the long-duration dynamics of plasma as electric currents traveling within them produced magnetic fields which, in a fashion similar to a dynamo, gave rise to new currents. In 1950, Lundquist experimentally studied Alfvén waves in mercury and introduced the characterizing Lundquist number, which describes the plasma's conductivity. In 1958, Woltjer, working on astrophysical plasmas, noted that magnetic helicity is conserved, which implies that a twisty field will attempt to maintain its twistyness even with external forces being applied to it.[4]

Starting in 1959, Alfvén and a team including Lindberg, Mitlid and Jacobsen built a device to create balls of plasma for study. This device was identical to modern "coaxial injector" devices (see below) and the experimenters were surprised to find a number of interesting behaviors. Among these was the creation of stable rings of plasma. In spite of their many successes, in 1964 the researchers turned to other areas and the injector concept lay dormant for two decades.[5]

Background work in fusion

In 1951 efforts to produce controlled fusion for power production began. These experiments generally used some sort of pulsed power to deliver the large magnetic forces required in the experiments. The current magnitudes and the resulting forces were unprecedented. In 1957 Harold Furth, Levine and Waniek reported on the dynamics of large magnets, demonstrating that the limiting factor in magnet performance was physical; stresses in the magnet would overcome its own mechanical limits. They proposed winding these magnets in such a way that the forces within the magnet windings cancelled out, the "force-free condition". Although it was not known at the time, this is the same magnetic field as in a spheromak.[6]

In 1957 the ZETA machine started operation in the UK. ZETA was at that time by far the largest and most powerful fusion device in the world. It operated until 1968, by which time many devices matched its size. During its operation, the experimental team noticed that on occasion the plasma would maintain confinement long after the experimental run had ostensibly ended, although this was not then studied in depth. Years later in 1974, John Bryan Taylor characterized these self-stable plasmas, which he called "quiescent". He developed the Taylor state equilibrium concept, a plasma state that conserves helicity in its lowest possible energy state. This led to a re-awakening of compact torus research.[7]

In the aftermath of ZETA both the "classical" z-pinch concept and the newer theta-pinch lost support. While working on such a machine in the early 1960s, one designed with a conical pinch area, Bostick and Wells found that the machine sometimes created stable rings of plasma.[8] A series of machines to study the phenomenon followed. One magnetic probe measurement found the toroidal magnetic field profile of a spheromak; the toroidal field was zero on axis, rose to a maximum at some interior point, and then went to zero at the wall.[7] However, the theta-pinch failed to reach the high-energy conditions needed for fusion. Most work on theta-pinch had ended by the 1970s.

The golden age

The key concept in magnetic fusion energy (MFE) is the Lawson criterion, a combination of the plasma temperature, density and confinement time.[9] Fusion devices generally fell into two classes: pulsed machines like the z-pinch, which attempted to reach high densities and temperatures but only for microseconds, and steady-state concepts such as the stellarator and magnetic mirror, which attempted to reach the Lawson criterion through longer confinement times.

Taylor's work suggested that self-stable plasmas would be a simple way to approach the problem along the confinement time axis. This sparked a new round of theoretical developments. In 1979 Rosenbluth and Bussac published a paper describing generalizations of Taylor's work, including a spherical minimum energy state having zero toroidal field on the bounding surface.[10] This means that there is no externally driven current on the device axis and so there are no external toroidal field coils. It appeared that this approach would allow for fusion reactors of greatly simpler design than the predominant stellarator and tokamak approaches.

Several experimental devices emerged almost overnight. Wells recognized his earlier experiments as examples of these plasmas. He had moved to the University of Miami and started gathering funding for a device combining two of his earlier conical theta-pinch systems, which became Trisops. In Japan, Nihon University built the PS-1, which used a combination of theta and zeta pinches to produce spheromaks. Harold Furth was excited by the prospect of a less-expensive solution to the confinement issue, and started the S1 at the Princeton Plasma Physics Laboratory, which used inductive heating. Many of these early experiments were summarized by Furth in 1983.[11]

These early MFE experiments culminated in the Compact Torus Experiment (CTX) at Los Alamos. This was the era's largest and most powerful device, generating spheromaks with surface currents of 1 MA, temperatures of 100 eV, and peak electron betas over 20%.[12] CTX experimented with methods to re-introduce energy into the fully formed spheromak in order to counter losses at the surface. In spite of these early successes, by the late 1980s the tokamak had surpassed the confinement times of the spheromaks by orders of magnitude. For example, JET was achieving confinement times on the order of 1 second.[13]

The major event that ended most spheromak work was not technical; funding for the entire US fusion program was dramatically curtailed in FY86, and many of the "alternate approaches", which included spheromaks, were defunded. Existing experiments in the US continued until their funding ran out, while smaller programs elsewhere, notably in Japan and the new SPHEX machine in the UK, continued from 1979 to 1997. CTX gained additional funding from the Defence Department and continued experiments until 1990; the last runs improved temperatures to 400 eV,[14] and confinement times to the order of 3 ms.[15]

Astrophysics

Through the early 1990s spheromak work was widely used by the astrophysics community to explain various events and the spheromak was studied as an add-on to existing MFE devices. D.M. Rust and A. Kumar were particularly active in using magnetic helicity and relaxation to study solar prominences.[16] Similar work was carried out by Bellan and Hansen at Caltech,[17] and by the Swarthmore Spheromak Experiment (SSX) project at Swarthmore College.

Fusion accessory

Some MFE work continued through this period, almost all of it using spheromaks as accessory devices for other reactors. Caltech and INRS-EMT in Canada both used accelerated spheromaks as a way to refuel tokamaks.[18] Others studied the use of spheromaks to inject helicity into tokamaks, eventually leading to the Helicity Injected Spherical Torus (HIST) device and similar concepts for a number of existing devices.[19]

Defence

Hammer, Hartman et al. showed that spheromaks could be accelerated to extremely high velocities using a railgun, which led to several proposed uses. Among these was the use of such plasmas as "bullets" to fire at incoming warheads with the hope that the associated electrical currents would disrupt their electronics. This led to experiments on the Shiva Star system, although these were cancelled in the mid-1990s.[20][21]

Other domains

Other proposed uses included firing spheromaks at metal targets to generate intense X-ray flashes as a backlighting source for other experiments.[18]

In the late 1990s spheromak concepts were applied towards the study of fundamental plasma physics, notably magnetic reconnection.[18] Dual-spheromak machines were built at the University of Tokyo, Princeton (MRX) and Swarthmore College.

Rebirth in MFE

Then, in 1994, fusion history repeated itself. T. Kenneth Fowler was summarizing the results from CTX's experimental runs in the 1980s when he noticed that confinement time was proportional to the plasma temperature.[18] This was unexpected; the ideal gas law generally states that higher temperatures in a given confinement area lead to higher density and pressure. In conventional devices such as the tokamak, this increased temperature/pressure increases turbulence that dramatically lowers confinement time. If the spheromak really did give improved confinement with increased temperature, this would be enormously important. A series of similar papers followed, all of which suggested that there might be a "fast path" to an ignition-level spheromak reactor.[22][23]

The promise was so great that several new MFE experiments started to study these issues. Notable among these is the Sustained Spheromak Physics Experiment (SSPX) at Lawrence Livermore National Laboratory, which is studying the problems of generating long-life spheromaks through electrostatic injection of additional helicity.[24]

Theory

Force-free plasma vortices have uniform magnetic helicity and therefore are stable against many disruptions. Typically, the current decays faster in the colder regions until the gradient in helicity is large enough to allow a turbulent redistribution of the current.

Force-free vortices follow the following equations.

$$\nabla \times \mathbf{B} = \alpha \mathbf{B}$$

$$\mathbf{v} = \pm \beta \mathbf{B}$$

The first equation describes a Lorentz force-free fluid: the $\mathbf{j} \times \mathbf{B}$ forces are everywhere zero. For a laboratory plasma α is a constant and β is a scalar function of spatial coordinates.

Note that, unlike most plasma structures, the Lorentz force and the Magnus force, which is proportional to the mass density ρ, play equivalent roles.