From Wikipedia, the free encyclopedia

Interstellar space travel is manned or unmanned travel between stars. The concept of interstellar travel via starships is a staple of science fiction. Interstellar travel is conceptually much more difficult than interplanetary travel. Distances between the planets in the Solar System are typically measured in astronomical units (AU), while distances between stars are hundreds of thousands of AU and are usually expressed in light-years. Intergalactic travel, or travel between different galaxies, would be even more difficult.

A variety of concepts have been discussed in the literature since the first astronautical pioneers, such as Konstantin Tsiolkovsky, Robert Esnault-Pelterie and Robert Hutchings Goddard. Given sufficient travel time and engineering work, both unmanned and sleeper-ship interstellar travel require no breakthrough physics, but considerable technological and economic challenges need to be met. NASA, ESA and other space agencies have been researching these topics for decades and have accumulated a number of theoretical approaches.

Difficulties

The main challenge facing interstellar travel is the immense distance between the stars. Both great speed and a long travel time are therefore required. With propulsion methods based on currently known physical principles, the journey would take years to millennia. An interstellar ship would therefore face the manifold hazards found in interplanetary travel, including vacuum, radiation, weightlessness, and micrometeoroids. Even the minimum multi-year travel times to the nearest stars are beyond current manned space mission design experience. The fundamental limits of spacetime present another challenge.

As a collision hazard, the distance between stars is not a problem in and of itself. Virtually all the material that would pose a problem is in the Solar System, along the disk that contains the planets, the asteroid belt, the Oort cloud, comets, free asteroids, and macro- and micrometeoroids. Any device or projectile should therefore be sent in a direction away from this material. The larger the object humans send, the greater the chance of it hitting something or being hit. One option is to send something very small, for which the chance of striking or being struck by something is virtually non-existent in the vacuum of interplanetary and interstellar space.[1][2]

Required energy

A significant factor contributing to the difficulty is the energy which must be supplied to obtain a reasonable travel time. A lower bound for the required energy is the kinetic energy $K = \tfrac{1}{2}mv^2$, where $m$ is the final mass. If deceleration on arrival is desired and cannot be achieved by any means other than the engines of the ship, then the required energy at least doubles, because the energy needed to halt the ship equals the energy needed to accelerate it to travel speed.

The velocity for a manned round trip of a few decades to even the nearest star is several thousand times greater than that of present space vehicles. Because of the $v^2$ term in the kinetic energy formula, millions of times as much energy is required. Accelerating one ton to one-tenth of the speed of light requires at least 450 PJ ($4.5 \times 10^{17}$ J, or 125 billion kWh), without factoring in the efficiency of the propulsion mechanism. This energy has to be generated on board from stored fuel, harvested from the interstellar medium, or projected over immense distances.
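
The one-ton figure can be checked with a few lines of Python. This is a minimal sketch that assumes "one ton" means 1,000 kg and uses the classical formula from the text, so it is a lower bound that ignores relativistic corrections and propulsion efficiency. The function name is illustrative only.

```python
C = 299_792_458.0  # speed of light in m/s

def kinetic_energy_joules(mass_kg, speed_fraction_of_c):
    """Classical kinetic energy K = 1/2 m v^2 (a lower bound; ignores relativity)."""
    v = speed_fraction_of_c * C
    return 0.5 * mass_kg * v ** 2

# One metric ton (assumed 1,000 kg) accelerated to one-tenth of light speed.
energy_j = kinetic_energy_joules(1000.0, 0.1)
energy_pj = energy_j / 1e15      # petajoules
energy_kwh = energy_j / 3.6e6    # 1 kWh = 3.6e6 J

print(f"{energy_j:.2e} J, about {energy_pj:.0f} PJ or {energy_kwh:.3e} kWh")
```

The result is close to the 450 PJ and 125 billion kWh quoted above. Because energy scales with the square of speed, a thousandfold increase in speed costs a millionfold increase in energy.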

The energy requirements make interstellar travel very difficult. It has been reported that at the 2008 Joint Propulsion Conference, multiple experts opined that it was improbable that humans would ever explore beyond the Solar System.[3] Brice N. Cassenti, an associate professor with the Department of Engineering and Science at Rensselaer Polytechnic Institute, stated that at least the total energy output of the entire world [in a given year] would be required to send a probe to the nearest star.[3]

Interstellar medium

A major issue with traveling at extremely high speeds is that interstellar dust and gas may cause considerable damage to the craft, due to the high relative speeds and large kinetic energies involved. Various shielding methods to mitigate this problem have been proposed.[citation needed] Larger objects (such as macroscopic dust grains) are far less common, but would be much more destructive. The risks of impacting such objects, and methods of mitigating these risks, have been discussed in the literature, but many unknowns remain.[citation needed]

Travel time

It has been argued that an interstellar mission which cannot be completed within 50 years should not be started at all. Instead, assuming that a civilization is still on an increasing curve of propulsion system velocity, not yet having reached the limit, the resources should be invested in designing a better propulsion system. This is because a slow spacecraft would probably be passed by another mission sent later with more advanced propulsion (Incessant Obsolescence Postulate).[4] On the other hand, Andrew Kennedy has shown that if one calculates the journey time to a given destination as the rate of travel speed derived from growth (even exponential growth) increases, there is a clear minimum in the total time to that destination from now (see wait calculation).[5] Voyages undertaken before the minimum will be overtaken by those who leave at the minimum, while those who leave after the minimum will never overtake those who left at the minimum.

One argument against the stance of delaying a start until reaching fast propulsion system velocity is that the various other non-technical problems that are specific to long-distance travel at considerably higher speed (such as interstellar particle impact, possible dramatic shortening of average human life span during extended space residence, etc.) may remain obstacles that take much longer time to resolve than the propulsion issue alone, assuming that they can even be solved eventually at all. A case can therefore be made for starting a mission without delay, based on the concept of an achievable and dedicated but relatively slow interstellar mission using the current technological state-of-the-art and at relatively low cost, rather than banking on being able to solve all problems associated with a faster mission without having a reliable time frame for achievability of such.

Intergalactic travel involves distances about a million-fold greater than interstellar distances, making it radically more difficult than even interstellar travel.

Interstellar distances

Astronomical distances are often measured in the time it would take a beam of light to travel between two points (see light-year). Light in a vacuum travels approximately 300,000 kilometers per second or 186,000 miles per second.

The distance from Earth to the Moon is 1.3 light-seconds. With current spacecraft propulsion technologies, a craft can cover the distance from the Earth to the Moon in around eight hours (New Horizons). That means light travels approximately thirty thousand times faster than current spacecraft propulsion technologies. The distance from Earth to other planets in the Solar System ranges from three light-minutes to about four light-hours. Depending on the planet and its alignment to Earth, for a typical unmanned spacecraft these trips will take from a few months to a little over a decade.[citation needed]

The nearest known star to the Sun is Proxima Centauri, which is 4.23 light-years away. However, there may be undiscovered brown dwarf systems that are closer.[6] The fastest outward-bound spacecraft yet sent, Voyager 1, has covered 1/600th of a light-year in 30 years and is currently moving at 1/18,000th the speed of light. At this rate, a journey to Proxima Centauri would take 80,000 years.[7] Of course, this mission was not specifically intended to travel fast to the stars, and current technology could do much better. The travel time could be reduced to a millennium using solar sails, or to a century or less using nuclear pulse propulsion. A better understanding of the vastness of the interstellar distance to one of the closest stars to the sun, Alpha Centauri A (a Sun-like star), can be obtained by scaling down the Earth-Sun distance (~150,000,000 km) to one meter. On this scale the distance to Alpha Centauri A would still be 271 kilometers or about 169 miles.
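
The Voyager 1 estimate is simple arithmetic on the figures quoted above. A short sketch, assuming constant speed for the whole trip; the function name is illustrative:

```python
def travel_time_years(distance_ly, speed_fraction_of_c):
    """Coasting time in years at constant speed, ignoring acceleration phases."""
    return distance_ly / speed_fraction_of_c

PROXIMA_LY = 4.23            # distance to Proxima Centauri quoted in the text
VOYAGER_SPEED = 1 / 18_000   # Voyager 1 speed as a fraction of c, from the text

voyager_years = travel_time_years(PROXIMA_LY, VOYAGER_SPEED)
tenth_c_years = travel_time_years(PROXIMA_LY, 0.10)

print(f"At Voyager 1 speed: about {voyager_years:,.0f} years")
print(f"At 10% of light speed: about {tenth_c_years:.0f} years")
```

The first figure comes out near 76,000 years, which the text rounds to 80,000. The second shows how much a cruise speed of one-tenth of light speed would shorten the trip.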

However, more speculative approaches to interstellar travel offer the possibility of circumventing these difficulties. Special relativity offers the possibility of shortening the travel time: if a starship with sufficiently advanced engines could reach velocities approaching the speed of light, relativistic time dilation would make the voyage much shorter for the traveler. However, it would still take many years of elapsed time as viewed by the people remaining on Earth, and upon returning to Earth, the travelers would find that far more time had elapsed on Earth than had for them. (For more on this effect, see twin paradox.)

General relativity offers the theoretical possibility that faster-than-light travel may be possible without violating fundamental laws of physics, for example, through wormholes, although it is still debated whether this is possible, in part, because of causality concerns. Proposed mechanisms for faster-than-light travel within the theory of general relativity require the existence of exotic matter.

Communications

The round-trip delay time is the minimum time between an observation by the probe and the moment the probe can receive instructions from Earth reacting to the observation. Given that information can travel no faster than the speed of light, this is about 17 hours for Voyager 1; near Proxima Centauri it would be 8 years. Faster reactions would have to be programmed to be carried out automatically. Of course, in the case of a manned flight the crew can respond immediately to their observations. However, the round-trip delay time makes them not only extremely distant from Earth but also, in terms of communication, extremely isolated from it (analogous to how past long-distance explorers were isolated before the invention of the electrical telegraph).

Interstellar communication is still problematic: even if a probe could reach the nearest star, communicating back to Earth would be difficult given the extreme distance. See Interstellar communication.

Prime targets for interstellar travel

There are 59 known stellar systems within 20 light years from the Sun, containing 81 visible stars.

Manned missions

The mass of any craft capable of carrying humans would inevitably be substantially larger than that necessary for an unmanned interstellar probe. For instance, the first space probe, Sputnik 1, had a payload of 83.6 kg, while Sputnik 2, the first spacecraft to carry a living passenger (Laika the dog), had a payload six times that, at 508.3 kg. This underestimates the difference in the case of interstellar missions, given the vastly greater travel times involved and the resulting necessity of a closed-cycle life support system. Given continuing advances in technology, combined with the aggregate risks and support requirements of manned interstellar travel, the first interstellar missions are unlikely to carry earthly life forms.

A manned craft will require more time to reach its top speed, as humans have limited tolerance to acceleration.

Time dilation

Main article: Time dilation

Assuming one cannot travel faster than light, one might conclude that a human can never make a round trip farther from Earth than 20 light-years if the traveler is active between the ages of 20 and 60. So a traveler would never be able to reach more than the very few star systems which exist within the limit of 10–20 light-years from Earth.

But that would be a mistaken conclusion, because it fails to take into account time dilation. Informally explained, clocks aboard the ship run slower than Earth clocks, so if the ship's engines are powerful enough, the ship can reach almost anywhere in the galaxy and return to Earth within 40 years of ship time. The problem is that there is a difference between the time elapsed in the astronaut's ship and the time elapsed on Earth.

An example will make this clearer. Suppose a spaceship travels to a star 32 light years away. First it accelerates at a constant 1.03 g (i.e., $10.1\ \mathrm{m/s^2}$) for 1.32 years (ship time). Then it stops the engines and coasts for the next 17.3 years (ship time) at a constant speed. Then it decelerates for 1.32 ship-years so as to come to a stop at the destination. The astronaut takes a look around and comes back to Earth the same way.

After the full round trip, the clocks on board the ship show that 40 years have passed, but according to the Earth calendar the ship comes back 76 years after launch.

So, the overall average speed is 0.84 lightyears per earth year, or 1.6 lightyears per ship year. This is possible because at a speed of 0.87 c, time on board the ship seems to run slower. Every two Earth years, ship clocks advance 1 year.

From the viewpoint of the astronaut, onboard clocks seem to be running normally. The star ahead seems to be approaching at a speed of 0.87 lightyears per ship year. As all the universe looks contracted along the direction of travel to half the size it had when the ship was at rest, the distance between that star and the Sun seems to be 16 light years as measured by the astronaut, so it's no wonder that the trip at 0.87 ly per shipyear takes 20 ship years.

At higher speeds, the time onboard will run even slower, so the astronaut could travel to the center of the Milky Way (30 kly from Earth) and back in 40 years ship-time. But the speed according to Earth clocks will always be less than 1 lightyear per Earth year, so, when back home, the astronaut will find that 60 thousand years will have passed on Earth.
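
The factor of two in the example comes from the Lorentz factor at the cruise speed. The sketch below covers only the coasting phase, using the 0.87 c, 32 light-year and 17.3 ship-year figures from the text. The acceleration phases are left out, and the variable names are illustrative.

```python
import math

def lorentz_factor(beta):
    """Lorentz factor gamma for a speed beta = v/c, with 0 <= beta < 1."""
    return 1.0 / math.sqrt(1.0 - beta ** 2)

beta = 0.87                    # cruise speed from the example, as a fraction of c
gamma = lorentz_factor(beta)   # close to 2: ship clocks run at about half rate

rest_distance_ly = 32.0                        # Earth-frame distance to the star
contracted_ly = rest_distance_ly / gamma       # distance as measured on board
coast_ship_years = 17.3                        # coasting time on ship clocks
coast_earth_years = coast_ship_years * gamma   # same interval on Earth clocks

print(f"gamma = {gamma:.2f}")
print(f"contracted distance = {contracted_ly:.1f} light-years")
print(f"coasting: {coast_ship_years} ship-years = {coast_earth_years:.1f} Earth-years")
```

The Lorentz factor comes out slightly above 2, so the contracted distance is just under 16 light-years, in line with the rounded figures in the example.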

Constant acceleration

Regardless of how it is achieved, if a propulsion system can produce 1 g of acceleration continuously from departure to destination, then this will be the fastest method of travel. If the propulsion system drives the ship faster and faster for the first half of the journey, then turns around and brakes the craft so that it arrives at the destination at a standstill, this is a constant acceleration journey. This would also have the advantage of producing constant gravity.

From the planetary observer perspective the ship will appear to steadily accelerate but more slowly as it approaches the speed of light. The ship will be close to the speed of light after about a year of accelerating and remain at that speed until it brakes for the end of the journey.

From the ship perspective there will be no top limit on speed – the ship keeps going faster and faster the whole first half. This happens because the ship's time sense slows down – relative to the planetary observer – the more it approaches the speed of light.

The result is an impressively fast journey if you are in the ship. Here is a table of journey times, in years, for various constant accelerations.

| Destination | Ship time at 1 g (years) | Ship time at 2 g (years) | Ship time at 5 g (years) | Ship time at 10 g (years) | Planetary time frame (years) |
|---|---|---|---|---|---|
| Alpha Centauri | 4 | 2.8 | 1.8 | 1.3 | 5 |
| Sirius | 7 | 5 | 3 | 2.2 | 13 |
| Galactic Core | 340 | 244 | 155 | 110 | 30,000 |
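
Journey times for this kind of trip can be estimated with the standard special-relativity formulas for constant proper acceleration. The sketch below assumes acceleration over the first half of the distance and braking over the second half, with no coasting. The function name and the 4.37 light-year distance to Alpha Centauri are assumptions that do not come from the table, and the table does not state its own assumptions, so its entries need not match these results.

```python
import math

C = 299_792_458.0            # speed of light, m/s
G0 = 9.80665                 # standard gravity, m/s^2
YEAR = 365.25 * 24 * 3600    # Julian year, s
LIGHT_YEAR = C * YEAR        # metres in one light-year

def flip_and_burn_times(distance_ly, accel_g):
    """Return (ship_years, planet_years) for accelerating over the first half
    of the distance and braking over the second half."""
    a = accel_g * G0
    half = 0.5 * distance_ly * LIGHT_YEAR
    # Rapidity reached at the midpoint: cosh(phi) = 1 + a * x / c^2
    phi = math.acosh(1.0 + a * half / C ** 2)
    ship_seconds = 2.0 * (C / a) * phi               # proper time on board
    planet_seconds = 2.0 * (C / a) * math.sinh(phi)  # time in the planetary frame
    return ship_seconds / YEAR, planet_seconds / YEAR

# Assumed distance to Alpha Centauri: 4.37 light-years.
ship_years, planet_years = flip_and_burn_times(4.37, 1.0)
print(f"1 g to Alpha Centauri: {ship_years:.1f} ship-years, "
      f"{planet_years:.1f} planetary years")
```

Under these assumptions a 1 g trip over 4.37 light-years takes roughly 3.6 years of ship time and about 6 years in the planetary frame. Raising the acceleration shortens the ship time further, but the planetary-frame time can never fall below the light-travel time.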

Proposed methods

Slow manned missions

Potential slow manned interstellar missions based on current and near-future propulsion technologies are associated with trip times ranging from about one hundred years to thousands of years. The duration of a slow interstellar journey presents a major obstacle, and existing concepts deal with this problem in different ways.[14] They can be distinguished by the "state" in which humans are transported on board the spacecraft.

Generation ships

Main article: Generation ship

A generation ship (or world ship) is a type of interstellar ark in which the crew which arrives at the destination is descended from those who started the journey. Generation ships are not currently feasible because of the difficulty of constructing a ship of the enormous required scale and the great biological and sociological problems that life aboard such a ship raises.[15][16][17][18]

Suspended animation

Scientists and writers have postulated various techniques for suspended animation. These include human hibernation and cryonic preservation. While neither is currently practical, they offer the possibility of sleeper ships in which the passengers lie inert for the long years of the voyage.[19]

Extended human lifespan

A variant on this possibility is based on the development of substantial human life extension, such as the "Strategies for Engineered Negligible Senescence" proposed by Dr. Aubrey de Grey. If a ship crew had lifespans of some thousands of years, or had artificial bodies, they could traverse interstellar distances without the need to replace the crew in generations. The psychological effects of such an extended period of travel would potentially still pose a problem.

Frozen embryos

Main article: Embryo space colonization

A robotic space mission carrying some number of frozen early stage human embryos is another theoretical possibility. This method of space colonization requires, among other things, the development of a method to replicate conditions in a uterus, the prior detection of a habitable terrestrial planet, and advances in the field of fully autonomous mobile robots and educational robots which would replace human parents.[20]

Island hopping through interstellar space

Interstellar space is not completely empty; it contains trillions of icy bodies ranging from small asteroids (Oort cloud) to possible rogue planets. There may be ways to take advantage of these resources for a good part of an interstellar trip, slowly hopping from body to body or setting up waystations along the way.[21]

Faster manned missions

If a spaceship could average 10 percent of light speed (and decelerate at the destination, for manned missions), this would be enough to reach Proxima Centauri in forty years. Several propulsion concepts have been proposed that might eventually be developed to accomplish this (see the section below on propulsion methods), but none of them is ready for near-term (few decades) development at acceptable cost.[citation needed]

Unmanned

Nanoprobes

Near-lightspeed nanospacecraft might be possible in the near future, built on existing microchip technology with a newly developed nanoscale thruster. Researchers at the University of Michigan are developing thrusters that use nanoparticles as propellant. Their technology is called “nanoparticle field extraction thruster”, or nanoFET. These devices act like small particle accelerators, shooting conductive nanoparticles out into space.[22]

Given the light weight of these probes, it would take much less energy to accelerate them. With on-board solar cells they could continually accelerate using solar power. One can envision a day when a fleet of millions or even billions of these probes swarms to distant stars at nearly the speed of light, while relaying signals back to Earth through a vast interstellar communication network.

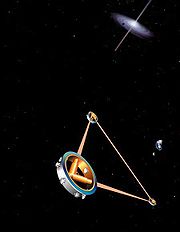

Ships with an external energy source

Some proposed ships would be supplied with energy from an external source, such as a laser. Because no power plant needs to be carried on board, the mass of the ship is reduced, which makes it easier to accelerate and allows higher speeds to be reached. Geoffrey A. Landis proposed an interstellar probe, based on future technology, that would be supplied with energy from an external source (a laser at a base station) and propelled by an ion thruster.[23][24][25][26]

Propulsion

Rocket concepts

All rocket concepts are limited by the rocket equation, which sets the characteristic velocity available as a function of exhaust velocity and mass ratio, the ratio of initial ($M_0$, including fuel) to final ($M_1$, fuel depleted) mass.

Very high specific power, the ratio of jet-power to total vehicle mass, is required to reach interstellar targets within sub-century time-frames.[27] Some heat transfer is inevitable and a tremendous heating load must be adequately handled.

Thus, for interstellar rocket concepts of all technologies, a key engineering problem (seldom explicitly discussed) is limiting the heat transfer from the exhaust stream back into the vehicle.[28]
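
The rocket equation mentioned at the start of this section can be sketched as follows. This is the classical (non-relativistic) Tsiolkovsky form, which is a reasonable approximation only while speeds stay well below that of light. The function names and the example speeds are illustrative assumptions, not figures from any particular design.

```python
import math

def delta_v(exhaust_velocity, mass_ratio):
    """Classical rocket equation: delta-v = v_e * ln(M0 / M1)."""
    return exhaust_velocity * math.log(mass_ratio)

def required_mass_ratio(target_delta_v, exhaust_velocity):
    """Mass ratio M0 / M1 needed to reach a given delta-v."""
    return math.exp(target_delta_v / exhaust_velocity)

C_KM_S = 299_792.458   # speed of light, km/s

# Hypothetical example: exhaust at 5% of c, cruise at 10% of c.
flyby_ratio = required_mass_ratio(0.10 * C_KM_S, 0.05 * C_KM_S)
# Braking at the destination doubles the delta-v and squares the mass ratio.
braking_ratio = required_mass_ratio(0.20 * C_KM_S, 0.05 * C_KM_S)

print(f"fly-by mass ratio:  {flyby_ratio:.1f}")
print(f"braking mass ratio: {braking_ratio:.1f}")
```

Because the mass ratio grows exponentially with delta-v divided by exhaust velocity, a modest shortfall in exhaust velocity quickly translates into very large mass ratios.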

Nuclear fission powered

Fission-electric

Nuclear-electric or plasma engines, operating for long periods at low thrust and powered by fission reactors, have the potential to reach speeds much greater than chemically powered vehicles or nuclear-thermal rockets. Such vehicles probably have the potential to power Solar System exploration with reasonable trip times within the current century. Because of their low-thrust propulsion, they would be limited to off-planet, deep-space operation. Electric propulsion powered by a portable power source, such as a nuclear reactor, produces only small thrust, and the equipment needed to convert nuclear energy into electricity adds a lot of weight. As a consequence of the resulting low accelerations, such a craft would take centuries to reach, for example, 15% of the velocity of light, making it unsuitable for interstellar flight within a single human lifetime.[29][30][31]

Fission-fragment

Fission-fragment rockets use nuclear fission to create high-speed jets of fission fragments, which are ejected at speeds of up to 12,000 km/s. With fission, the energy output is approximately 0.1% of the total mass-energy of the reactor fuel, which limits the effective exhaust velocity to about 5% of the velocity of light. For maximum velocity, the reaction mass should optimally consist of fission products, the "ash" of the primary energy source, so that no extra reaction mass needs to be accounted for in the mass ratio. This is known as a fission-fragment rocket. Thermal-propulsion engines such as NERVA produce sufficient thrust, but can only achieve relatively low-velocity exhaust jets, so accelerating to the desired speed would require an enormous amount of fuel.[29][30][31]

Nuclear pulse

Main article: Nuclear pulse propulsion

Based on work in the late 1950s to the early 1960s, it has been technically possible to build spaceships with nuclear pulse propulsion engines, i.e. driven by a series of nuclear explosions. This propulsion system offers the prospect of very high specific impulse (space travel's equivalent of fuel economy) and high specific power.[32]

Project Orion team member Freeman Dyson proposed in 1968 an interstellar spacecraft using nuclear pulse propulsion which used pure deuterium fusion detonations with a very high fuel-burnup fraction. He computed an exhaust velocity of 15,000 km/s and a 100,000 tonne space vehicle able to achieve a 20,000 km/s delta-v, allowing a flight time to Alpha Centauri of 130 years.[33] Later studies indicate that the top cruise velocity that can theoretically be achieved by a Teller-Ulam thermonuclear unit powered Orion starship, assuming no fuel is saved for slowing back down, is about 8% to 10% of the speed of light (0.08–0.1c).[34] An atomic (fission) Orion can achieve perhaps 3%–5% of the speed of light. A nuclear pulse drive starship powered by fusion-antimatter catalyzed nuclear pulse propulsion units would similarly be in the 10% range, and pure matter-antimatter annihilation rockets would be theoretically capable of obtaining a velocity between 50% and 80% of the speed of light. In each case, saving fuel for slowing down halves the maximum speed. The concept of using a magnetic sail to decelerate the spacecraft as it approaches its destination has been discussed as an alternative to using propellant; this would allow the ship to travel near the maximum theoretical velocity.[35] Alternative designs utilizing similar principles include Project Longshot, Project Daedalus, and Mini-Mag Orion. The principle of external nuclear pulse propulsion to maximize survivable power has remained common among serious concepts for interstellar flight without external power beaming and for very high-performance interplanetary flight.
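
The effect of reserving fuel for braking can be illustrated with the figures quoted for Dyson's design. The sketch assumes that half of the 20,000 km/s delta-v is kept for deceleration, that the burns are short compared with the cruise, and that Alpha Centauri is 4.37 light-years away. These are assumptions chosen to be consistent with the quoted 130 years, not a statement of how the original study was carried out.

```python
C_KM_S = 299_792.458   # speed of light, km/s

def cruise_years(distance_ly, total_delta_v_km_s, brake_at_arrival=True):
    """Cruise time in years, ignoring the duration of the burns themselves."""
    # If fuel is reserved for braking, only half the delta-v sets the cruise speed.
    if brake_at_arrival:
        cruise_speed = total_delta_v_km_s / 2
    else:
        cruise_speed = total_delta_v_km_s
    return distance_ly / (cruise_speed / C_KM_S)

ALPHA_CENTAURI_LY = 4.37   # assumed distance, not stated in the text

with_braking = cruise_years(ALPHA_CENTAURI_LY, 20_000)
flyby_only = cruise_years(ALPHA_CENTAURI_LY, 20_000, brake_at_arrival=False)

print(f"with braking: about {with_braking:.0f} years")
print(f"fly-by only:  about {flyby_only:.0f} years")
```

With braking, the cruise takes about 131 years, close to the quoted flight time. A fly-by that spends the whole delta-v on acceleration would take half as long.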

In the 1970s the nuclear pulse propulsion concept was further refined by Project Daedalus through the use of externally triggered inertial confinement fusion, in this case producing fusion explosions by compressing fusion fuel pellets with high-powered electron beams. Since then lasers, ion beams, neutral particle beams and hyper-kinetic projectiles have been suggested to produce nuclear pulses for propulsion purposes.[36]

A current impediment to the development of any nuclear explosive powered spacecraft is the 1963 Partial Test Ban Treaty which includes a prohibition on the detonation of any nuclear devices (even non-weapon based) in outer space. This treaty would therefore need to be re-negotiated, although a project on the scale of an interstellar mission using currently foreseeable technology would probably require international co-operation on at least the scale of the International Space Station.

Nuclear fusion rockets

Fusion rocket starships, powered by nuclear fusion reactions, should conceivably be able to reach speeds of the order of 10% of that of light, based on energy considerations alone. In theory, a large number of stages could push a vehicle arbitrarily close to the speed of light.[37] These would "burn" such light element fuels as deuterium, tritium, $^{3}$He, $^{11}$B, and $^{7}$Li. Because fusion yields about 0.3–0.9% of the mass of the nuclear fuel as released energy, it is energetically more favorable than fission, which releases <0.1% of the fuel's mass-energy. The maximum exhaust velocities potentially energetically available are correspondingly higher than for fission, typically 4–10% of c. However, the most easily achievable fusion reactions release a large fraction of their energy as high-energy neutrons, which are a significant source of energy loss. Thus, while these concepts seem to offer the best (nearest-term) prospects for travel to the nearest stars within a (long) human lifetime, they still involve massive technological and engineering difficulties, which may turn out to be intractable for decades or centuries.

Early studies include Project Daedalus, performed by the British Interplanetary Society in 1973–1978, and Project Longshot, a student project sponsored by NASA and the US Naval Academy, completed in 1988. Another fairly detailed vehicle system, "Discovery II",[38] designed and optimized for crewed Solar System exploration, based on the D–$^{3}$He reaction but using hydrogen as reaction mass, has been described by a team from NASA's Glenn Research Center. It achieves characteristic velocities of >300 km/s with an acceleration of ~$1.7 \times 10^{-3}$ g, with a ship initial mass of ~1700 metric tons and a payload fraction above 10%. While these are still far short of the requirements for interstellar travel on human timescales, the study seems to represent a reasonable benchmark towards what may be approachable within several decades, which is not impossibly beyond the current state of the art. Based on the concept's 2.2% burnup fraction, it could achieve a pure fusion product exhaust velocity of ~3,000 km/s.
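
The link between the fraction of fuel mass released as energy and the best possible exhaust velocity can be sketched with a simple energy balance. The estimate below is idealized: it assumes that all of the released energy ends up as kinetic energy of the reaction products, and it uses the non-relativistic relation $\tfrac{1}{2}v^2 = \varepsilon c^2$. Real engines lose energy (for example to neutrons), so practical values fall below these ceilings. The function name is illustrative.

```python
import math

def max_exhaust_fraction_of_c(energy_fraction):
    """Ceiling on exhaust speed, as a fraction of c, if a fraction
    `energy_fraction` of the fuel's rest-mass energy becomes exhaust
    kinetic energy. Non-relativistic estimate: 1/2 v^2 = eps * c^2."""
    return math.sqrt(2.0 * energy_fraction)

fission = max_exhaust_fraction_of_c(0.001)       # about 0.1% for fission
fusion_low = max_exhaust_fraction_of_c(0.003)    # 0.3% for fusion
fusion_high = max_exhaust_fraction_of_c(0.009)   # 0.9% for fusion

print(f"fission ceiling: {fission:.1%} of c")
print(f"fusion ceiling:  {fusion_low:.1%} to {fusion_high:.1%} of c")
```

For fission the ceiling is a little under 5% of c, consistent with the limit quoted for fission-fragment rockets. For fusion the idealized ceiling is higher than the typical 4–10% of c given above, as expected once losses are taken into account.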

Antimatter rockets

An antimatter rocket would have a far higher energy density and specific impulse than any other proposed class of rocket. If energy resources and efficient production methods are found to make antimatter in the quantities required and store it safely, it would be theoretically possible to reach speeds approaching that of light. Relativistic time dilation would then become more noticeable, making time pass at a slower rate for the travelers as perceived by an outside observer and reducing the trip time experienced by human travelers.

Supposing the production and storage of antimatter should become practical, two further problems would present themselves and need to be solved. First, in the annihilation of antimatter, much of the energy is lost as very penetrating high-energy gamma radiation, and also as neutrinos, so that substantially less than $mc^2$ would actually be available if the antimatter were simply allowed to annihilate into radiation thermally. Even so, the energy available for propulsion would probably be substantially higher than the ~1% of $mc^2$ yield of nuclear fusion, the next-best rival candidate.

Second, once again heat transfer from exhaust to vehicle seems likely to deposit enormous wasted energy into the ship, considering the large fraction of the energy that goes into penetrating gamma rays. Even assuming biological shielding were provided to protect the passengers, some of the energy would inevitably heat the vehicle, and may thereby prove limiting. This requires consideration for serious proposals if useful accelerations are to be achieved, as the energies involved (e.g., for 0.1g ship acceleration, approaching 0.3 trillion watts per ton of ship mass) are very large.
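
The power figure quoted for 0.1 g can be reproduced under one simple assumption: an ideal photon rocket, in which the exhaust is a perfectly collimated beam of light, so that thrust equals beam power divided by the speed of light. The sketch also assumes that "ton" means 1,000 kg. The function name is illustrative.

```python
C = 299_792_458.0   # speed of light, m/s
G0 = 9.80665        # standard gravity, m/s^2

def photon_rocket_power_watts(ship_mass_kg, accel_g):
    """Beam power for an ideal photon rocket: thrust F = P / c, so P = m * a * c."""
    thrust_newtons = ship_mass_kg * accel_g * G0
    return thrust_newtons * C

# One metric ton (assumed 1,000 kg) accelerating at 0.1 g.
power_per_ton = photon_rocket_power_watts(1000.0, 0.1)
print(f"{power_per_ton:.2e} W per ton of ship mass")
```

The result is just under $3 \times 10^{11}$ W per ton, matching the "approaching 0.3 trillion watts" in the text. Even a small fraction of that power absorbed by the vehicle would be a severe heating load.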

Recently Friedwardt Winterberg has suggested a means of converting an imploding matter-antimatter plasma into a highly collimated beam of gamma rays, effectively a gamma-ray laser, which would very efficiently transfer thrust to the space vehicle's structure via a variant of the Mössbauer effect.[39] Such a system, if antimatter production can be made efficient, would then be a very effective photon rocket, as originally envisaged by Eugen Sänger.[40]

Non-rocket concepts

A problem with all traditional rocket propulsion methods is that the spacecraft would need to carry its fuel with it, thus making it very massive, in accordance with the rocket equation. Some concepts attempt to escape from this problem.[41]

Interstellar ramjets

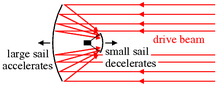

In 1960, Robert W. Bussard proposed the Bussard ramjet, a fusion rocket in which a huge scoop would collect the diffuse hydrogen in interstellar space, "burn" it on the fly using a proton–proton fusion reaction, and expel it out of the back. Though later calculations with more accurate estimates suggest that the thrust generated would be less than the drag caused by any conceivable scoop design, the idea is attractive because, as the fuel would be collected en route (commensurate with the concept of energy harvesting), the craft could theoretically accelerate to near the speed of light.

Beamed propulsion

A magnetic sail could also decelerate at its destination without depending on carried fuel or a driving beam in the destination system, by interacting with the plasma found in the solar wind of the destination star and the interstellar medium.[44] Unlike Forward's light sail scheme, this would not require the action of the particle beam used for launching the craft. Alternatively, a magnetic sail could be pushed by a particle beam[45] or a plasma beam[46] to reach high velocity, as proposed by Landis and Winglee.

Beamed propulsion seems to be the best interstellar travel technique presently available, since it uses known physics and known technology that is being developed for other purposes,[8] and would be considerably cheaper than nuclear pulse propulsion.[citation needed]

Pre-accelerated fuel

Achieving start-stop interstellar trip times of less than a human lifetime requires mass ratios of between 1,000 and 1,000,000, even for the nearer stars. This could be achieved by multi-staged vehicles on a vast scale.[37] Alternatively, large linear accelerators could propel fuel to fission-propelled space vehicles, avoiding the limitations of the rocket equation.[48]

Speculative methods

Hawking radiation rockets

In a black hole starship, a parabolic reflector would reflect Hawking radiation from an artificial black hole. In 2009, Louis Crane and Shawn Westmoreland of Kansas State University published a paper investigating the feasibility of this idea. Their conclusion was that it was on the edge of possibility, but that quantum gravity effects that are presently unknown may make it easier or make it impossible.[49][50]

Magnetic monopole rockets

If some of the grand unification models are correct, e.g. 't Hooft–Polyakov, it would be possible to construct a photonic engine that uses no antimatter, thanks to the magnetic monopole, which hypothetically can catalyze the decay of a proton to a positron and a π0-meson:[51][52]

$$p \rightarrow e^{+} + \pi^{0}$$

A magnetic monopole engine could also work on a once-through scheme such as the Bussard ramjet (see above).

At the same time, most of the modern Grand unification theories such as M-theory predict no magnetic monopoles, which casts doubt on this attractive idea.

By transmission

Main article: Teleportation

If physical entities could be transmitted as information and reconstructed at a destination, travel at nearly the speed of light would be possible, which for the "travelers" would be instantaneous. However, sending an atom-by-atom description of (say) a human body would be a daunting task. Extracting and sending only a computer brain simulation is a significant part of that problem. "Journey" time would be the light-travel time plus the time needed to encode, send and reconstruct the whole transmission.[53]

Faster-than-light travel

Main article: Faster-than-light

Scientists and authors have postulated a number of ways by which it might be possible to surpass the speed of light. Even the most serious-minded of these are speculative.

According to Einstein's equation of general relativity, spacetime is curved:

$$G_{\mu\nu} = \frac{8\pi G}{c^{4}}\, T_{\mu\nu}$$

In physics, the Alcubierre drive is based on an argument that the curvature could take the form of a wave in which a spaceship might be carried in a "bubble". Space would be collapsing at one end of the bubble and expanding at the other end. The motion of the wave would carry a spaceship from one space point to another in less time than light would take through unwarped space. Nevertheless, the spaceship would not be moving faster than light within the bubble. This concept would require the spaceship to incorporate a region of exotic matter, or "negative mass".

Wormholes are conjectural distortions in spacetime that theorists postulate could connect two arbitrary points in the universe, across an Einstein–Rosen Bridge. It is not known whether wormholes are possible in practice. Although there are solutions to the Einstein equation of general relativity which allow for wormholes, all of the currently known solutions involve some assumption, for example the existence of negative mass, which may be unphysical.[55] However, Cramer et al. argue that such wormholes might have been created in the early universe, stabilized by cosmic string.[56] The general theory of wormholes is discussed by Visser in the book Lorentzian Wormholes.[57]

Designs and studies

Project Hyperion

Project Hyperion is one of the projects of Icarus Interstellar.[58]

Enzmann starship

Main article: Enzmann starship

The Enzmann starship, as detailed by G. Harry Stine in the October 1973 issue of Analog, was a design for a future starship, based on the ideas of Dr. Robert Duncan-Enzmann.[59] The spacecraft itself as proposed used a 12,000,000 ton ball of frozen deuterium to power 12–24 thermonuclear pulse propulsion units.[59] Twice as long as the Empire State Building and assembled in orbit, the spacecraft was part of a larger project preceded by interstellar probes and telescopic observation of target star systems.[59][60]

NASA research

NASA has been researching interstellar travel since its formation, translating important foreign-language papers and conducting early studies on applying fusion propulsion, in the 1960s, and laser propulsion, in the 1970s, to interstellar travel.

The NASA Breakthrough Propulsion Physics Program (terminated in FY 2003 after a 6-year, $1.2 million study, as "No breakthroughs appear imminent.")[61] identified some breakthroughs which are needed for interstellar travel to be possible.[62]

Geoffrey A. Landis of NASA's Glenn Research Center states that a laser-powered interstellar sail ship could possibly be launched within 50 years, using new methods of space travel. "I think that ultimately we're going to do it, it's just a question of when and who," Landis said in an interview. Rockets are too slow to send humans on interstellar missions. Instead, he envisions interstellar craft with extensive sails, propelled by laser light to about one-tenth the speed of light. It would take such a ship about 43 years to reach Alpha Centauri, if it passed through the system. Slowing down to stop at Alpha Centauri could increase the trip to 100 years,[63] while a journey without slowing down raises the issue of making sufficiently accurate and useful observations and measurements during a fly-by.
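The 43-year figure above can be checked with simple arithmetic. The following is a minimal sketch, assuming a distance to Alpha Centauri of about 4.37 light years (a value not stated in the text) and a constant cruise speed with no acceleration or deceleration phases; the function names are illustrative only.

```python
import math

# Assumed distance to Alpha Centauri in light years (not given in the text).
ALPHA_CENTAURI_LY = 4.37


def cruise_time_years(distance_ly: float, beta: float) -> float:
    """Earth-frame travel time in years at constant speed beta = v/c."""
    if not 0.0 < beta < 1.0:
        raise ValueError("beta must lie strictly between 0 and 1")
    # Light covers one light year per year, so time = distance / (v/c).
    return distance_ly / beta


def ship_time_years(distance_ly: float, beta: float) -> float:
    """Proper time on board, shortened by the Lorentz factor."""
    return cruise_time_years(distance_ly, beta) * math.sqrt(1.0 - beta**2)


if __name__ == "__main__":
    earth_t = cruise_time_years(ALPHA_CENTAURI_LY, 0.1)
    ship_t = ship_time_years(ALPHA_CENTAURI_LY, 0.1)
    print(f"Earth-frame time: {earth_t:.1f} years")
    print(f"Ship-frame time:  {ship_t:.1f} years")
```

At one-tenth the speed of light the relativistic correction is well under one percent, so the Earth-frame and on-board travel times are nearly the same.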

Hundred-Year Starship study

The 100 Year Starship (100YSS) is the name of the overall effort that will, over the next century, work toward achieving interstellar travel. The 100 Year Starship study is the name of a one-year project to assess the attributes of and lay the groundwork for an organization that can carry forward the 100 Year Starship vision.

Dr. Harold ("Sonny") White[64] from NASA's Johnson Space Center is a member of Icarus Interstellar,[65] the nonprofit foundation whose mission is to realize interstellar flight before the year 2100. At the 2012 meeting of 100YSS, he reported using a laser to try to warp spacetime by 1 part in 10 million with the aim of helping to make interstellar travel possible.[66]

See also

- Interstellar communication

- Starwisp

- Interstellar travel in fiction

- Health threat from cosmic rays

- Kardashev scale

- List of nearest terrestrial exoplanets

- List of plasma (physics) articles

- Hundred-Year Starship

General relativity

For a more accessible and less technical introduction to this topic, see Introduction to general relativity.

A simulated black hole of 10 solar masses as seen from a distance of 600 kilometers with the Milky Way in the background.

Some predictions of general relativity differ significantly from those of classical physics, especially concerning the passage of time, the geometry of space, the motion of bodies in free fall, and the propagation of light. Examples of such differences include gravitational time dilation, gravitational lensing, the gravitational redshift of light, and the gravitational time delay. The predictions of general relativity have been confirmed in all observations and experiments to date. Although general relativity is not the only relativistic theory of gravity, it is the simplest theory that is consistent with experimental data. However, unanswered questions remain, the most fundamental being how general relativity can be reconciled with the laws of quantum physics to produce a complete and self-consistent theory of quantum gravity.

Einstein's theory has important astrophysical implications. For example, it implies the existence of black holes—regions of space in which space and time are distorted in such a way that nothing, not even light, can escape—as an end-state for massive stars. There is ample evidence that the intense radiation emitted by certain kinds of astronomical objects is due to black holes; for example, microquasars and active galactic nuclei result from the presence of stellar black holes and black holes of a much more massive type, respectively. The bending of light by gravity can lead to the phenomenon of gravitational lensing, in which multiple images of the same distant astronomical object are visible in the sky. General relativity also predicts the existence of gravitational waves, which have since been observed indirectly; a direct measurement is the aim of projects such as LIGO and NASA/ESA Laser Interferometer Space Antenna and various pulsar timing arrays. In addition, general relativity is the basis of current cosmological models of a consistently expanding universe.

Contents

- 1 History

- 2 From classical mechanics to general relativity

- 3 Definition and basic applications

- 4 Consequences of Einstein's theory

- 5 Astrophysical applications

- 6 Advanced concepts

- 7 Relationship with quantum theory

- 8 Current status

- 9 See also

- 10 Notes

- 11 References

- 12 Further reading

- 13 External links

History

Main articles: History of general relativity and Classical theories of gravitation

The Einstein field equations are nonlinear and very difficult to solve. Einstein used approximation methods in working out initial predictions of the theory. But as early as 1916, the astrophysicist Karl Schwarzschild found the first non-trivial exact solution to the Einstein field equations, the so-called Schwarzschild metric. This solution laid the groundwork for the description of the final stages of gravitational collapse, and the objects known today as black holes. In the same year, the first steps towards generalizing Schwarzschild's solution to electrically charged objects were taken, which eventually resulted in the Reissner–Nordström solution, now associated with electrically charged black holes.[3] In 1917, Einstein applied his theory to the universe as a whole, initiating the field of relativistic cosmology. In line with contemporary thinking, he assumed a static universe, adding a new parameter to his original field equations—the cosmological constant—to match that observational presumption.[4] By 1929, however, the work of Hubble and others had shown that our universe is expanding. This is readily described by the expanding cosmological solutions found by Friedmann in 1922, which do not require a cosmological constant. Lemaître used these solutions to formulate the earliest version of the Big Bang models, in which our universe has evolved from an extremely hot and dense earlier state.[5] Einstein later declared the cosmological constant the biggest blunder of his life.[6]

During that period, general relativity remained something of a curiosity among physical theories. It was clearly superior to Newtonian gravity, being consistent with special relativity and accounting for several effects unexplained by the Newtonian theory. Einstein himself had shown in 1915 how his theory explained the anomalous perihelion advance of the planet Mercury without any arbitrary parameters ("fudge factors").[7] Similarly, a 1919 expedition led by Eddington confirmed general relativity's prediction for the deflection of starlight by the Sun during the total solar eclipse of May 29, 1919,[8] making Einstein instantly famous.[9] Yet the theory entered the mainstream of theoretical physics and astrophysics only with the developments between approximately 1960 and 1975, now known as the golden age of general relativity.[10] Physicists began to understand the concept of a black hole, and to identify quasars as one of these objects' astrophysical manifestations.[11] Ever more precise solar system tests confirmed the theory's predictive power,[12] and relativistic cosmology, too, became amenable to direct observational tests.[13]

From classical mechanics to general relativity

General relativity can be understood by examining its similarities with and departures from classical physics. The first step is the realization that classical mechanics and Newton's law of gravity admit a geometric description. The combination of this description with the laws of special relativity results in a heuristic derivation of general relativity.[14]

Geometry of Newtonian gravity

According to general relativity, objects in a gravitational field behave similarly to objects within an accelerating enclosure. For example, an observer will see a ball fall the same way in a rocket (left) as it does on Earth (right), provided that the acceleration of the rocket is equal to 9.8 m/s² (the acceleration due to gravity at the surface of the Earth).

Conversely, one might expect that inertial motions, once identified by observing the actual motions of bodies and making allowances for the external forces (such as electromagnetism or friction), can be used to define the geometry of space, as well as a time coordinate. However, there is an ambiguity once gravity comes into play. According to Newton's law of gravity, and independently verified by experiments such as that of Eötvös and its successors (see Eötvös experiment), there is a universality of free fall (also known as the weak equivalence principle, or the universal equality of inertial and passive-gravitational mass): the trajectory of a test body in free fall depends only on its position and initial speed, but not on any of its material properties.[17] A simplified version of this is embodied in Einstein's elevator experiment, illustrated in the figure on the right: for an observer in a small enclosed room, it is impossible to decide, by mapping the trajectory of bodies such as a dropped ball, whether the room is at rest in a gravitational field, or in free space aboard an accelerating rocket generating a force equal to gravity.[18]

Given the universality of free fall, there is no observable distinction between inertial motion and motion under the influence of the gravitational force. This suggests the definition of a new class of inertial motion, namely that of objects in free fall under the influence of gravity. This new class of preferred motions, too, defines a geometry of space and time—in mathematical terms, it is the geodesic motion associated with a specific connection which depends on the gradient of the gravitational potential. Space, in this construction, still has the ordinary Euclidean geometry. However, spacetime as a whole is more complicated. As can be shown using simple thought experiments following the free-fall trajectories of different test particles, the result of transporting spacetime vectors that can denote a particle's velocity (time-like vectors) will vary with the particle's trajectory; mathematically speaking, the Newtonian connection is not integrable. From this, one can deduce that spacetime is curved. The result is a geometric formulation of Newtonian gravity using only covariant concepts, i.e. a description which is valid in any desired coordinate system.[19] In this geometric description, tidal effects—the relative acceleration of bodies in free fall—are related to the derivative of the connection, showing how the modified geometry is caused by the presence of mass.[20]

Relativistic generalization

As intriguing as geometric Newtonian gravity may be, its basis, classical mechanics, is merely a limiting case of (special) relativistic mechanics.[21] In the language of symmetry: where gravity can be neglected, physics is Lorentz invariant as in special relativity rather than Galilei invariant as in classical mechanics. (The defining symmetry of special relativity is the Poincaré group which also includes translations and rotations.) The differences between the two become significant when we are dealing with speeds approaching the speed of light, and with high-energy phenomena.[22]

With Lorentz symmetry, additional structures come into play. They are defined by the set of light cones (see the image on the left). The light-cones define a causal structure: for each event A, there is a set of events that can, in principle, either influence or be influenced by A via signals or interactions that do not need to travel faster than light (such as event B in the image), and a set of events for which such an influence is impossible (such as event C in the image). These sets are observer-independent.[23] In conjunction with the world-lines of freely falling particles, the light-cones can be used to reconstruct the space–time's semi-Riemannian metric, at least up to a positive scalar factor. In mathematical terms, this defines a conformal structure.[24]

Special relativity is defined in the absence of gravity, so for practical applications, it is a suitable model whenever gravity can be neglected. Bringing gravity into play, and assuming the universality of free fall, an analogous reasoning as in the previous section applies: there are no global inertial frames. Instead there are approximate inertial frames moving alongside freely falling particles. Translated into the language of spacetime: the straight time-like lines that define a gravity-free inertial frame are deformed to lines that are curved relative to each other, suggesting that the inclusion of gravity necessitates a change in spacetime geometry.[25]

A priori, it is not clear whether the new local frames in free fall coincide with the reference frames in which the laws of special relativity hold—that theory is based on the propagation of light, and thus on electromagnetism, which could have a different set of preferred frames. But using different assumptions about the special-relativistic frames (such as their being earth-fixed, or in free fall), one can derive different predictions for the gravitational redshift, that is, the way in which the frequency of light shifts as the light propagates through a gravitational field (cf. below). The actual measurements show that free-falling frames are the ones in which light propagates as it does in special relativity.[26] The generalization of this statement, namely that the laws of special relativity hold to good approximation in freely falling (and non-rotating) reference frames, is known as the Einstein equivalence principle, a crucial guiding principle for generalizing special-relativistic physics to include gravity.[27]

The same experimental data shows that time as measured by clocks in a gravitational field—proper time, to give the technical term—does not follow the rules of special relativity. In the language of spacetime geometry, it is not measured by the Minkowski metric. As in the Newtonian case, this is suggestive of a more general geometry. At small scales, all reference frames that are in free fall are equivalent, and approximately Minkowskian. Consequently, we are now dealing with a curved generalization of Minkowski space. The metric tensor that defines the geometry—in particular, how lengths and angles are measured—is not the Minkowski metric of special relativity, it is a generalization known as a semi- or pseudo-Riemannian metric. Furthermore, each Riemannian metric is naturally associated with one particular kind of connection, the Levi-Civita connection, and this is, in fact, the connection that satisfies the equivalence principle and makes space locally Minkowskian (that is, in suitable locally inertial coordinates, the metric is Minkowskian, and its first partial derivatives and the connection coefficients vanish).[28]

Einstein's equations

Main articles: Einstein field equations and Mathematics of general relativity

Having formulated the relativistic, geometric version of the effects of gravity, the question of gravity's source remains. In Newtonian gravity, the source is mass. In special relativity, mass turns out to be part of a more general quantity called the energy–momentum tensor, which includes both energy and momentum densities as well as stress (that is, pressure and shear).[29] Using the equivalence principle, this tensor is readily generalized to curved space-time. Drawing further upon the analogy with geometric Newtonian gravity, it is natural to assume that the field equation for gravity relates this tensor and the Ricci tensor, which describes a particular class of tidal effects: the change in volume for a small cloud of test particles that are initially at rest, and then fall freely. In special relativity, conservation of energy–momentum corresponds to the statement that the energy–momentum tensor is divergence-free. This formula, too, is readily generalized to curved spacetime by replacing partial derivatives with their curved-manifold counterparts, covariant derivatives studied in differential geometry.

With this additional condition—the covariant divergence of the

energy–momentum tensor, and hence of whatever is on the other side of

the equation, is zero— the simplest set of equations are what are called

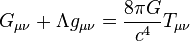

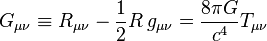

Einstein's (field) equations:Einstein's field equations

|

and the metric. Where

and the metric. Where  is symmetric. In particular,

is symmetric. In particular, is the energy–momentum tensor. All tensors are written in abstract index notation.[30] Matching the theory's prediction to observational results for planetary orbits

(or, equivalently, assuring that the weak-gravity, low-speed limit is

Newtonian mechanics), the proportionality constant can be fixed as κ =

8πG/c4, with G the gravitational constant and c the speed of light.[31] When there is no matter present, so that the energy–momentum tensor vanishes, the results are the vacuum Einstein equations,

is the energy–momentum tensor. All tensors are written in abstract index notation.[30] Matching the theory's prediction to observational results for planetary orbits

(or, equivalently, assuring that the weak-gravity, low-speed limit is

Newtonian mechanics), the proportionality constant can be fixed as κ =

8πG/c4, with G the gravitational constant and c the speed of light.[31] When there is no matter present, so that the energy–momentum tensor vanishes, the results are the vacuum Einstein equations,Definition and basic applications[edit]

The derivation outlined in the previous section contains all the information needed to define general relativity, describe its key properties, and address a question of crucial importance in physics, namely how the theory can be used for model-building.

Definition and basic properties

General relativity is a metric theory of gravitation. At its core are Einstein's equations, which describe the relation between the geometry of a four-dimensional, pseudo-Riemannian manifold representing spacetime, and the energy–momentum contained in that spacetime.[33] Phenomena that in classical mechanics are ascribed to the action of the force of gravity (such as free-fall, orbital motion, and spacecraft trajectories), correspond to inertial motion within a curved geometry of spacetime in general relativity; there is no gravitational force deflecting objects from their natural, straight paths. Instead, gravity corresponds to changes in the properties of space and time, which in turn changes the straightest-possible paths that objects will naturally follow.[34] The curvature is, in turn, caused by the energy–momentum of matter. Paraphrasing the relativist John Archibald Wheeler, spacetime tells matter how to move; matter tells spacetime how to curve.[35]

While general relativity replaces the scalar gravitational potential of classical physics by a symmetric rank-two tensor, the latter reduces to the former in certain limiting cases. For weak gravitational fields and slow speed relative to the speed of light, the theory's predictions converge on those of Newton's law of universal gravitation.[36]
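The weak-field, slow-motion limit mentioned above can be written out explicitly. This is a sketch in standard textbook form, assuming the metric signature (−,+,+,+); the symbols Φ (Newtonian potential) and ρ (mass density) are introduced here for illustration and do not appear in the text.

```latex
% Weak-field limit: the time-time component of the metric encodes the potential
g_{00} \approx -\left(1 + \frac{2\Phi}{c^{2}}\right), \qquad |\Phi| \ll c^{2}

% Geodesic motion of a slow test body reduces to Newton's equation of motion
\frac{d^{2}\mathbf{x}}{dt^{2}} \approx -\nabla\Phi

% The field equations reduce to Poisson's equation for the potential
\nabla^{2}\Phi = 4\pi G \rho
```

In this limit a single component of the rank-two metric tensor carries the information that the scalar potential carries in Newton's theory.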

As it is constructed using tensors, general relativity exhibits general covariance: its laws—and further laws formulated within the general relativistic framework—take on the same form in all coordinate systems.[37] Furthermore, the theory does not contain any invariant geometric background structures, i.e. it is background independent. It thus satisfies a more stringent general principle of relativity, namely that the laws of physics are the same for all observers.[38] Locally, as expressed in the equivalence principle, spacetime is Minkowskian, and the laws of physics exhibit local Lorentz invariance.[39]

Model-building

The core concept of general-relativistic model-building is that of a solution of Einstein's equations. Given both Einstein's equations and suitable equations for the properties of matter, such a solution consists of a specific semi-Riemannian manifold (usually defined by giving the metric in specific coordinates), and specific matter fields defined on that manifold. Matter and geometry must satisfy Einstein's equations, so in particular, the matter's energy–momentum tensor must be divergence-free. The matter must, of course, also satisfy whatever additional equations were imposed on its properties. In short, such a solution is a model universe that satisfies the laws of general relativity, and possibly additional laws governing whatever matter might be present.[40]

Einstein's equations are nonlinear partial differential equations and, as such, difficult to solve exactly.[41] Nevertheless, a number of exact solutions are known, although only a few have direct physical applications.[42] The best-known exact solutions, and also those most interesting from a physics point of view, are the Schwarzschild solution, the Reissner–Nordström solution and the Kerr metric, each corresponding to a certain type of black hole in an otherwise empty universe,[43] and the Friedmann–Lemaître–Robertson–Walker and de Sitter universes, each describing an expanding cosmos.[44] Exact solutions of great theoretical interest include the Gödel universe (which opens up the intriguing possibility of time travel in curved spacetimes), the Taub-NUT solution (a model universe that is homogeneous, but anisotropic), and anti-de Sitter space (which has recently come to prominence in the context of what is called the Maldacena conjecture).[45]
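As a small numerical illustration of the Schwarzschild solution named above: it is characterized by a single length scale, the Schwarzschild radius r_s = 2GM/c². The sketch below evaluates it for the Sun and for a 10-solar-mass black hole; the constants are standard reference values assumed here, and the function name is illustrative.

```python
# Assumed physical constants (SI units); not taken from the text.
G = 6.67430e-11          # gravitational constant, m^3 kg^-1 s^-2
C = 299_792_458.0        # speed of light, m/s
M_SUN = 1.98847e30       # solar mass, kg


def schwarzschild_radius_m(mass_kg: float) -> float:
    """Schwarzschild radius r_s = 2 G M / c^2 for a non-rotating mass."""
    if mass_kg <= 0.0:
        raise ValueError("mass must be positive")
    return 2.0 * G * mass_kg / C**2


if __name__ == "__main__":
    for label, mass in [("Sun", M_SUN), ("10 solar masses", 10 * M_SUN)]:
        r_s_km = schwarzschild_radius_m(mass) / 1000.0
        print(f"{label}: r_s is about {r_s_km:.1f} km")
```

The radius scales linearly with mass, so the 10-solar-mass case is simply ten times the solar value of roughly 3 km.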

Given the difficulty of finding exact solutions, Einstein's field equations are also solved frequently by numerical integration on a computer, or by considering small perturbations of exact solutions. In the field of numerical relativity, powerful computers are employed to simulate the geometry of spacetime and to solve Einstein's equations for interesting situations such as two colliding black holes.[46] In principle, such methods may be applied to any system, given sufficient computer resources, and may address fundamental questions such as naked singularities. Approximate solutions may also be found by perturbation theories such as linearized gravity[47] and its generalization, the post-Newtonian expansion, both of which were developed by Einstein. The latter provides a systematic approach to solving for the geometry of a spacetime that contains a distribution of matter that moves slowly compared with the speed of light. The expansion involves a series of terms; the first terms represent Newtonian gravity, whereas the later terms represent ever smaller corrections to Newton's theory due to general relativity.[48] An extension of this expansion is the parametrized post-Newtonian (PPN) formalism, which allows quantitative comparisons between the predictions of general relativity and alternative theories.[49]

Consequences of Einstein's theory

General relativity has a number of physical consequences. Some follow directly from the theory's axioms, whereas others have become clear only in the course of the ninety years of research that followed Einstein's initial publication.

Gravitational time dilation and frequency shift

Main article: Gravitational time dilation

Gravitational redshift has been measured in the laboratory[52] and using astronomical observations.[53] Gravitational time dilation in the Earth's gravitational field has been measured numerous times using atomic clocks,[54] while ongoing validation is provided as a side effect of the operation of the Global Positioning System (GPS).[55] Tests in stronger gravitational fields are provided by the observation of binary pulsars.[56] All results are in agreement with general relativity.[57] However, at the current level of accuracy, these observations cannot distinguish between general relativity and other theories in which the equivalence principle is valid.[58]
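To give a sense of scale for the GPS case, the sketch below estimates the daily clock offset of a satellite relative to a ground clock to first order in 1/c². It assumes a circular orbit of radius 26,560 km and a spherical, non-rotating Earth; these inputs and the function name are assumptions made for illustration, not values from the text.

```python
# Assumed constants (SI units); not taken from the text.
GM_EARTH = 3.986004418e14   # Earth's gravitational parameter, m^3/s^2
C = 299_792_458.0           # speed of light, m/s
R_EARTH = 6.371e6           # mean Earth radius, m
R_GPS = 2.656e7             # assumed GPS orbital radius, m
SECONDS_PER_DAY = 86_400.0


def gps_clock_offsets_us_per_day(r_sat_m: float = R_GPS):
    """Return (gravitational, velocity, net) offsets in microseconds per day.

    Positive values mean the satellite clock runs fast relative to the ground.
    """
    # Gravitational term: the higher clock sits in a weaker potential and runs faster.
    grav = GM_EARTH / C**2 * (1.0 / R_EARTH - 1.0 / r_sat_m)
    # Velocity term: circular-orbit speed squared is GM/r; moving clocks run slower.
    vel = -(GM_EARTH / r_sat_m) / (2.0 * C**2)
    to_us_per_day = SECONDS_PER_DAY * 1e6
    return grav * to_us_per_day, vel * to_us_per_day, (grav + vel) * to_us_per_day


if __name__ == "__main__":
    grav, vel, net = gps_clock_offsets_us_per_day()
    print(f"gravitational: {grav:+.1f} us/day")
    print(f"velocity:      {vel:+.1f} us/day")
    print(f"net:           {net:+.1f} us/day")
```

Under these assumptions the two effects partly cancel, leaving a net offset of about 38 microseconds per day, which is why uncorrected satellite clocks would drift noticeably.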

Light deflection and gravitational time delay

This and related predictions follow from the fact that light follows what is called a light-like or null geodesic—a generalization of the straight lines along which light travels in classical physics. Such geodesics are the generalization of the invariance of lightspeed in special relativity.[60] As one examines suitable model spacetimes (either the exterior Schwarzschild solution or, for more than a single mass, the post-Newtonian expansion),[61] several effects of gravity on light propagation emerge. Although the bending of light can also be derived by extending the universality of free fall to light,[62] the angle of deflection resulting from such calculations is only half the value given by general relativity.[63]
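The factor of two between the two calculations can be made concrete for light grazing the Sun. The sketch below uses the standard small-angle result α = 4GM/(c²b) for general relativity and half of that for the free-fall-only estimate; the solar constants and function names are assumptions introduced for illustration.

```python
import math

# Assumed constants (SI units); not taken from the text.
GM_SUN = 1.32712440018e20   # Sun's gravitational parameter, m^3/s^2
C = 299_792_458.0           # speed of light, m/s
R_SUN = 6.957e8             # solar radius, used as the impact parameter, m
ARCSEC_PER_RAD = 180.0 * 3600.0 / math.pi


def deflection_gr_arcsec(impact_parameter_m: float) -> float:
    """General-relativistic deflection angle 4GM/(c^2 b), in arcseconds."""
    return 4.0 * GM_SUN / (C**2 * impact_parameter_m) * ARCSEC_PER_RAD


def deflection_free_fall_arcsec(impact_parameter_m: float) -> float:
    """Deflection from universality of free fall alone: half the GR value."""
    return 0.5 * deflection_gr_arcsec(impact_parameter_m)


if __name__ == "__main__":
    print(f"GR:        {deflection_gr_arcsec(R_SUN):.2f} arcsec")
    print(f"Free fall: {deflection_free_fall_arcsec(R_SUN):.2f} arcsec")
```

For a ray grazing the solar limb this gives roughly 1.75 arcseconds in general relativity, against about 0.87 arcseconds from the free-fall argument.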

Closely related to light deflection is the gravitational time delay (or Shapiro delay), the phenomenon that light signals take longer to move through a gravitational field than they would in the absence of that field. There have been numerous successful tests of this prediction.[64] In the parameterized post-Newtonian formalism (PPN), measurements of both the deflection of light and the gravitational time delay determine a parameter called γ, which encodes the influence of gravity on the geometry of space.[65]

Gravitational waves

Main article: Gravitational wave

Data analysis methods routinely make use of the fact that these linearized waves can be Fourier decomposed.[68]

Some exact solutions describe gravitational waves without any approximation, e.g., a wave train traveling through empty space[69] or so-called Gowdy universes, varieties of an expanding cosmos filled with gravitational waves.[70] But for gravitational waves produced in astrophysically relevant situations, such as the merger of two black holes, numerical methods are presently the only way to construct appropriate models.[71]

Orbital effects and the relativity of direction

Main article: Kepler problem in general relativity

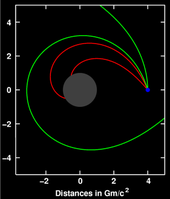

General relativity differs from classical mechanics in a number of predictions concerning orbiting bodies. It predicts an overall rotation (precession) of planetary orbits, as well as orbital decay caused by the emission of gravitational waves and effects related to the relativity of direction.

Precession of apsides

In general relativity, the apsides of any orbit (the points of the orbiting body's closest approach to, and greatest distance from, the system's center of mass) will precess: the orbit is not an ellipse, but akin to an ellipse that rotates about its focus, resulting in a rose curve-like shape (see image). Einstein first derived this result by using an approximate metric representing the Newtonian limit and treating the orbiting body as a test particle. For him, the fact that his theory gave a straightforward explanation of the anomalous perihelion shift of the planet Mercury, discovered earlier by Urbain Le Verrier in 1859, was important evidence that he had at last identified the correct form of the gravitational field equations.[72]

The effect can also be derived by using either the exact Schwarzschild metric (describing spacetime around a spherical mass)[73] or the much more general post-Newtonian formalism.[74] It is due to the influence of gravity on the geometry of space and to the contribution of self-energy to a body's gravity (encoded in the nonlinearity of Einstein's equations).[75] Relativistic precession has been observed for all planets that allow for accurate precession measurements (Mercury, Venus, and Earth),[76] as well as in binary pulsar systems, where it is larger by five orders of magnitude.[77]
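The size of the effect for Mercury can be estimated from the leading-order result for a test particle in the Schwarzschild metric, Δφ = 6πGM/(c²a(1 − e²)) per orbit. The sketch below is an illustration only; the orbital elements for Mercury and the function name are assumed reference values not given in the text.

```python
import math

# Assumed constants and orbital elements (SI units); not taken from the text.
GM_SUN = 1.32712440018e20    # Sun's gravitational parameter, m^3/s^2
C = 299_792_458.0            # speed of light, m/s
MERCURY_A = 5.7909e10        # semi-major axis, m
MERCURY_E = 0.2056           # orbital eccentricity
MERCURY_PERIOD_DAYS = 87.969
ARCSEC_PER_RAD = 180.0 * 3600.0 / math.pi
DAYS_PER_CENTURY = 36_525.0


def precession_arcsec_per_century(a_m: float, e: float, period_days: float) -> float:
    """Leading-order relativistic apsidal precession, in arcseconds per century."""
    # Advance per orbit in radians: 6 pi G M / (c^2 a (1 - e^2)).
    per_orbit = 6.0 * math.pi * GM_SUN / (C**2 * a_m * (1.0 - e**2))
    orbits_per_century = DAYS_PER_CENTURY / period_days
    return per_orbit * orbits_per_century * ARCSEC_PER_RAD


if __name__ == "__main__":
    value = precession_arcsec_per_century(MERCURY_A, MERCURY_E, MERCURY_PERIOD_DAYS)
    print(f"Mercury: about {value:.1f} arcsec per century")
```

With these inputs the result is close to 43 arcseconds per century, the size of the anomalous shift that Newtonian perturbation theory left unexplained.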

Orbital decay

The first observation of a decrease in orbital period due to the emission of gravitational waves was made by Hulse and Taylor, using the binary pulsar PSR 1913+16 they had discovered in 1974. This was the first detection of gravitational waves, albeit indirect, for which they were awarded the 1993 Nobel Prize in Physics.[80] Since then, several other binary pulsars have been found, in particular the double pulsar PSR J0737-3039, in which both stars are pulsars.[81]

Geodetic precession and frame-dragging

Main articles: Geodetic precession and Frame dragging

Several relativistic effects are directly related to the relativity of direction.[82] One is geodetic precession: the axis direction of a gyroscope in free fall in curved spacetime will change when compared, for instance, with the direction of light received from distant stars, even though such a gyroscope represents the way of keeping a direction as stable as possible ("parallel transport").[83] For the Moon–Earth system, this effect has been measured with the help of lunar laser ranging.[84] More recently, it has been measured for test masses aboard the satellite Gravity Probe B to a precision of better than 0.3%.[85][86]

Near a rotating mass, there are so-called gravitomagnetic or frame-dragging effects. A distant observer will determine that objects close to the mass get "dragged around". This is most extreme for rotating black holes where, for any object entering a zone known as the ergosphere, rotation is inevitable.[87] Such effects can again be tested through their influence on the orientation of gyroscopes in free fall.[88] Somewhat controversial tests have been performed using the LAGEOS satellites, confirming the relativistic prediction.[89] The Mars Global Surveyor probe in orbit around Mars has also been used.[90][91]
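
For a sense of scale, the geodetic precession of a gyroscope on a circular orbit around a non-rotating spherical mass is Ω = (3/2) (GM)^(3/2) / (c² r^(5/2)). The sketch below evaluates it for a low Earth orbit. The 642 km altitude is an assumed value roughly matching a Gravity Probe B-like polar orbit, the mean Earth radius is used, and oblateness corrections are ignored, so this is an order-of-magnitude check rather than the mission's actual prediction.

```python
import math

GM_EARTH = 3.986004418e14   # gravitational parameter of the Earth, m^3/s^2
C = 299_792_458.0           # speed of light, m/s
R_EARTH = 6.371e6           # mean Earth radius, m
SECONDS_PER_YEAR = 365.25 * 86400.0


def geodetic_precession_rate(r_m, gm=GM_EARTH):
    """Geodetic (de Sitter) precession rate in rad/s for a circular orbit of radius r_m."""
    return 1.5 * gm**1.5 / (C**2 * r_m**2.5)


def rate_arcsec_per_year(r_m, gm=GM_EARTH):
    """Same rate, converted to arcseconds per year."""
    return math.degrees(geodetic_precession_rate(r_m, gm) * SECONDS_PER_YEAR) * 3600.0


# Assumed orbital altitude (hypothetical input, not from the article).
ALTITUDE_M = 642e3
geodetic_rate = rate_arcsec_per_year(R_EARTH + ALTITUDE_M)
print(f"Geodetic precession: {geodetic_rate:.2f} arcsec per year")
```

The rate comes out at a few arcseconds per year, which indicates how demanding a sub-percent measurement of the effect is.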

Astrophysical applications

Gravitational lensing

Main article: Gravitational lensing

Gravitational lensing has developed into a tool of observational astronomy. It is used to detect the presence and distribution of dark matter, to provide a "natural telescope" for observing distant galaxies, and to obtain an independent estimate of the Hubble constant. Statistical evaluations of lensing data provide valuable insight into the structural evolution of galaxies.[97]

Gravitational wave astronomy

Main articles: Gravitational wave and Gravitational wave astronomy

Observations of gravitational waves promise to complement observations in the electromagnetic spectrum.[103] They are expected to yield information about black holes and other dense objects such as neutron stars and white dwarfs, about certain kinds of supernova implosions, and about processes in the very early universe, including the signature of certain types of hypothetical cosmic string.[104]

Black holes and other compact objects

Main article: Black hole

Whenever the ratio of an object's mass to its radius becomes sufficiently large, general relativity predicts the formation of a black hole, a region of space from which nothing, not even light, can escape. In the currently accepted models of stellar evolution, neutron stars of around 1.4 solar masses, and stellar black holes with a few to a few dozen solar masses, are thought to be the final state for the evolution of massive stars.[105] Usually a galaxy has one supermassive black hole with a few million to a few billion solar masses in its center,[106] and its presence is thought to have played an important role in the formation of the galaxy and larger cosmic structures.[107]

Black holes are also sought-after targets in the search for gravitational waves (cf. Gravitational waves, above). Merging black hole binaries should lead to some of the strongest gravitational wave signals reaching detectors here on Earth, and the phase directly before the merger ("chirp") could be used as a "standard candle" to deduce the distance to the merger events, and hence serve as a probe of cosmic expansion at large distances.[113] The gravitational waves produced as a stellar black hole plunges into a supermassive one should provide direct information about the supermassive black hole's geometry.[114]

Cosmology

This blue horseshoe is a distant galaxy that has been magnified and warped into a nearly complete ring by the strong gravitational pull of the massive foreground luminous red galaxy.

Main article: Physical cosmology

The current models of cosmology are based on Einstein's field equations with a cosmological constant $\Lambda$,

$$R_{\mu\nu} - \tfrac{1}{2} R\, g_{\mu\nu} + \Lambda g_{\mu\nu} = \frac{8\pi G}{c^{4}}\, T_{\mu\nu},$$

where $g_{\mu\nu}$ is the spacetime metric.[115] Isotropic and homogeneous solutions of these enhanced equations, the Friedmann–Lemaître–Robertson–Walker solutions,[116] allow physicists to model a universe that has evolved over the past 14 billion years from a hot, early Big Bang phase.[117] Once a small number of parameters (for example the universe's mean matter density) have been fixed by astronomical observation,[118] further observational data can be used to put the models to the test.[119] Predictions, all successful, include the initial abundance of chemical elements formed in a period of primordial nucleosynthesis,[120] the large-scale structure of the universe,[121] and the existence and properties of a "thermal echo" from the early cosmos, the cosmic background radiation.[122]

Astronomical observations of the cosmological expansion rate allow the total amount of matter in the universe to be estimated, although the nature of that matter remains mysterious in part. About 90% of all matter appears to be so-called dark matter, which has mass (or, equivalently, gravitational influence), but does not interact electromagnetically and, hence, cannot be observed directly.[123] There is no generally accepted description of this new kind of matter, within the framework of known particle physics[124] or otherwise.[125] Observational evidence from redshift surveys of distant supernovae and measurements of the cosmic background radiation also shows that the evolution of our universe is significantly influenced by a cosmological constant resulting in an acceleration of cosmic expansion or, equivalently, by a form of energy with an unusual equation of state, known as dark energy, the nature of which remains unclear.[126]
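
To illustrate how a few fixed parameters turn such a model into a concrete prediction, the sketch below computes the age of a spatially flat universe containing only matter and a cosmological constant, for which the Friedmann equation has the closed-form solution t₀ = (2 / (3 H₀ √Ω_Λ)) · asinh(√(Ω_Λ / Ω_m)). The function name and the parameter values (H₀ = 70 km/s/Mpc, Ω_m = 0.3) are round illustrative assumptions, not figures from the article, and radiation is neglected.

```python
import math

KM_PER_MPC = 3.0856775814913673e19       # kilometres in one megaparsec
SECONDS_PER_GYR = 1e9 * 365.25 * 86400.0  # seconds in one billion Julian years


def age_flat_lcdm_gyr(h0_km_s_mpc, omega_m):
    """Age in Gyr of a flat universe with matter and a cosmological constant.

    Requires 0 < omega_m < 1; radiation is neglected.
    """
    omega_lambda = 1.0 - omega_m  # flatness: the two densities sum to one
    hubble_time_gyr = KM_PER_MPC / h0_km_s_mpc / SECONDS_PER_GYR
    return (hubble_time_gyr
            * 2.0 / (3.0 * math.sqrt(omega_lambda))
            * math.asinh(math.sqrt(omega_lambda / omega_m)))


# Assumed illustrative parameters (hypothetical inputs).
age_gyr = age_flat_lcdm_gyr(70.0, 0.3)
print(f"Age of the model universe: {age_gyr:.1f} Gyr")
```

With these inputs the age comes out between 13 and 14 billion years, consistent with the figure quoted above. Raising the matter density at fixed H₀ makes the model universe younger.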

A so-called inflationary phase,[127] an additional phase of strongly accelerated expansion at cosmic times of around $10^{-33}$ seconds, was hypothesized in 1980 to account for several puzzling observations that were unexplained by classical cosmological models, such as the nearly perfect homogeneity of the cosmic background radiation.[128] Recent measurements of the cosmic background radiation have resulted in the first evidence for this scenario.[129] However, there is a bewildering variety of possible inflationary scenarios, which cannot be restricted by current observations.[130]

An even larger question is the physics of the earliest universe, prior to the inflationary phase and close to where the classical models predict the big bang singularity. An authoritative answer would require a complete theory of quantum gravity, which has not yet been developed[131] (cf. the section on quantum gravity, below).

Time travel

Kurt Gödel showed that solutions to Einstein's equations exist that contain closed timelike curves (CTCs), which allow for loops in time. These solutions require extreme physical conditions unlikely ever to occur in practice, and it remains an open question whether further laws of physics will eliminate them completely. Since then, other similarly impractical solutions of general relativity containing CTCs have been found, such as the Tipler cylinder and traversable wormholes.

Advanced concepts

Causal structure and global geometry

Main article: Causal structure

Aware of the importance of causal structure, Roger Penrose and others developed what is known as global geometry. In global geometry, the object of study is not one particular solution (or family of solutions) to Einstein's equations. Rather, relations that hold true for all geodesics, such as the Raychaudhuri equation, and additional non-specific assumptions about the nature of matter (usually in the form of so-called energy conditions) are used to derive general results.[133]

Horizons

Using global geometry, some spacetimes can be shown to contain boundaries called horizons, which demarcate one region from the rest of spacetime. The best-known examples are black holes: if mass is compressed into a sufficiently compact region of space (as specified in the hoop conjecture, the relevant length scale is the Schwarzschild radius[134]), no light from inside can escape to the outside. Since no object can overtake a light pulse, all interior matter is imprisoned as well. Passage from the exterior to the interior is still possible, showing that the boundary, the black hole's horizon, is not a physical barrier.[135]
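
The Schwarzschild radius mentioned above is r_s = 2GM / c². The short sketch below evaluates it for two familiar masses to show how compact an object must be before a horizon forms. The function name and the mass values are assumptions chosen for illustration.

```python
G = 6.67430e-11        # Newtonian gravitational constant, m^3 kg^-1 s^-2
C = 299_792_458.0      # speed of light, m/s

# Assumed reference masses (illustrative values).
M_SUN = 1.98847e30     # kg
M_EARTH = 5.9722e24    # kg


def schwarzschild_radius(mass_kg):
    """Schwarzschild radius r_s = 2 G M / c^2 in metres."""
    return 2.0 * G * mass_kg / C**2


rs_sun = schwarzschild_radius(M_SUN)
rs_earth = schwarzschild_radius(M_EARTH)
print(f"Sun:   {rs_sun / 1e3:.2f} km")
print(f"Earth: {rs_earth * 1e3:.2f} mm")
```

The Sun would have to be squeezed to a radius of about three kilometres, and the Earth to under a centimetre, which is why horizons only arise for extremely compact objects.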

The ergosphere of a rotating black hole, which plays a key role when it comes to extracting energy from such a black hole

Even more remarkably, there is a general set of laws known as black hole mechanics, which is analogous to the laws of thermodynamics. For instance, by the second law of black hole mechanics, the area of the event horizon of a general black hole will never decrease with time, analogous to the entropy of a thermodynamic system. This limits the energy that can be extracted by classical means from a rotating black hole (e.g. by the Penrose process).[137] There is strong evidence that the laws of black hole mechanics are, in fact, a subset of the laws of thermodynamics, and that the black hole area is proportional to its entropy.[138] This leads to a modification of the original laws of black hole mechanics: for instance, as the second law of black hole mechanics becomes part of the second law of thermodynamics, it is possible for black hole area to decrease—as long as other processes ensure that, overall, entropy increases. As thermodynamical objects with non-zero temperature, black holes should emit thermal radiation. Semi-classical calculations indicate that indeed they do, with the surface gravity playing the role of temperature in Planck's law. This radiation is known as Hawking radiation (cf. the quantum theory section, below).[139]
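
The thermodynamic quantities discussed above can be made concrete for a non-rotating (Schwarzschild) black hole, where the Hawking temperature is T = ħc³ / (8πGM k_B) and the Bekenstein–Hawking entropy is S = k_B c³ A / (4Għ), with A the horizon area. The sketch below evaluates both; the function names and the choice of one solar mass as the example are assumptions made here for illustration.

```python
import math

G = 6.67430e-11          # gravitational constant, m^3 kg^-1 s^-2
C = 299_792_458.0        # speed of light, m/s
HBAR = 1.054571817e-34   # reduced Planck constant, J s
K_B = 1.380649e-23       # Boltzmann constant, J/K
M_SUN = 1.98847e30       # assumed example mass, kg


def hawking_temperature(mass_kg):
    """Hawking temperature in kelvin of a Schwarzschild black hole."""
    return HBAR * C**3 / (8.0 * math.pi * G * mass_kg * K_B)


def horizon_area(mass_kg):
    """Area in m^2 of the event horizon of a Schwarzschild black hole."""
    r_s = 2.0 * G * mass_kg / C**2
    return 4.0 * math.pi * r_s**2


def entropy_in_units_of_kb(mass_kg):
    """Bekenstein-Hawking entropy divided by the Boltzmann constant."""
    return C**3 * horizon_area(mass_kg) / (4.0 * G * HBAR)


temp_sun = hawking_temperature(M_SUN)
entropy_sun = entropy_in_units_of_kb(M_SUN)
print(f"T = {temp_sun:.2e} K, S/k_B = {entropy_sun:.2e}")
```

For a solar-mass black hole the temperature is far below that of the cosmic background radiation, while the entropy is enormous. Because the entropy scales with the horizon area, and hence with the square of the mass, merging two such black holes can only increase the total, in line with the second law described above.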

There are other types of horizons. In an expanding universe, an observer may find that some regions of the past cannot be observed ("particle horizon"), and some regions of the future cannot be influenced ("event horizon").[140] Even in flat Minkowski space, when described by an accelerated observer (Rindler space), there will be horizons associated with a semi-classical radiation known as Unruh radiation.[141]

Singularities

Main article: Spacetime singularity

Another general feature of general relativity is the appearance of spacetime boundaries known as singularities. Spacetime can be explored by following up on timelike and lightlike geodesics, that is, all possible ways that light and particles in free fall can travel. But some solutions of Einstein's equations have "ragged edges": regions known as spacetime singularities, where the paths of light and falling particles come to an abrupt end, and geometry becomes ill-defined. In the more interesting cases, these are "curvature singularities", where geometrical quantities characterizing spacetime curvature, such as the Ricci scalar, take on infinite values.[142] Well-known examples of spacetimes with future singularities (where worldlines end) are the Schwarzschild solution, which describes a singularity inside an eternal static black hole,[143] and the Kerr solution with its ring-shaped singularity inside an eternal rotating black hole.[144] The Friedmann–Lemaître–Robertson–Walker solutions and other spacetimes describing universes have past singularities on which worldlines begin, namely Big Bang singularities, and some have future singularities (Big Crunch) as well.[145]

Given that these examples are all highly symmetric, and thus simplified, it is tempting to conclude that the occurrence of singularities is an artifact of idealization.[146] The famous singularity theorems, proved using the methods of global geometry, say otherwise: singularities are a generic feature of general relativity, and unavoidable once the collapse of an object with realistic matter properties has proceeded beyond a certain stage[147] and also at the beginning of a wide class of expanding universes.[148] However, the theorems say little about the properties of singularities, and much of current research is devoted to characterizing these entities' generic structure (hypothesized e.g. by the so-called BKL conjecture).[149] The cosmic censorship hypothesis states that all realistic future singularities (no perfect symmetries, matter with realistic properties) are safely hidden away behind a horizon, and thus invisible to all distant observers. While no formal proof yet exists, numerical simulations offer supporting evidence of its validity.[150]

Evolution equations

Main article: Initial value formulation (general relativity)

Each solution of Einstein's equation encompasses the whole history of a universe: it is not just some snapshot of how things are, but a whole, possibly matter-filled, spacetime. It describes the state of matter and geometry everywhere and