Scientific Method / Science & Exploration

http://arstechnica.com/

Short answer: Yes, but it's more complicated than that.

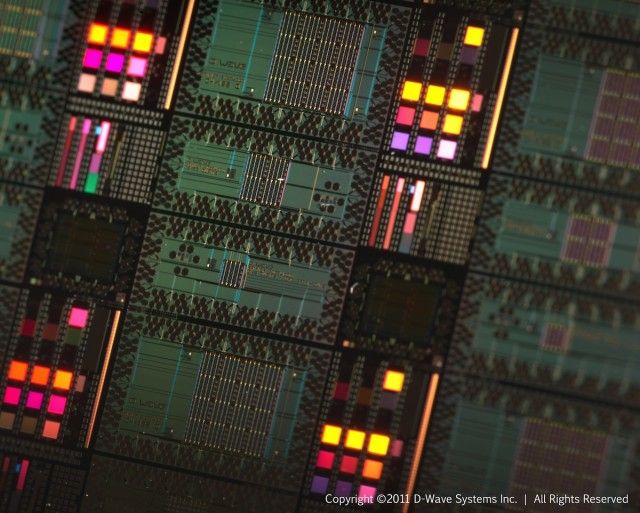

One of D-Wave's chips, circa 2011.

D-Wave

Back in November, D-Wave issued a press release that basically asked tech journalists to spray paint a finish line just behind their feet and break out a victory flag. It seemed a bit much. But now that D-Wave has declared victory, perhaps it's time to re-examine the skepticism. What exactly has D-Wave achieved, and does it constitute victory? Either way, where are the company's efforts focused now?

Of course the best way to judge D-Wave is not by its press releases nor by the specifications and benchmarks glued on the boxes of its processors—these should be treated with utmost paranoid suspicion. Instead, it's better to look at what researchers who have access to D-Wave hardware are claiming in publications. And despite my suspicions, the paper accompanying that last press release—plus a couple of other papers on the arXiV that were released earlier—is interesting. All together, they paint a picture that says we should finally be cautiously optimistic about D-Wave's progress.

A brief history of D-Wave's time

If you are unfamiliar with what D-Wave does and its history, you could easily read several other articles before continuing. The TLDR is as follows: a long time ago, in a country far far away (Canada), a little start-up company announced a 16-bit quantum computer. This surprised everyone, considering that a lot of researchers were previously very proud of achieving one or two bits. To muddy the waters even further, the alleged quantum computer used an entirely different approach from everyone else's attempts, one called adiabatic quantum computing.In adiabatic quantum computing, one does not directly perform operations on individual bits or groups of bits. This is unlike circuit quantum computers, where there are single operations such as a CNOT (controlled not, the fundamental logic operation in quantum computing). Instead, the solution to a problem here is re-configured so that it is the ground state of an energy landscape.

Think of it like this: in an energy landscape shaped like a bowl, a particle can sit at the bottom of the bowl, it can be sloshing back and forth up the sides of the bowl, or it can be anywhere in between. The ground state is one that involves the particle sitting at the bottom of the bowl. For a bowl, this is easy to figure out. But for an arbitrary landscape with multiple particles, the ground state is not easy to determine in advance. So even though we know that our desired solution is the ground state of some energy landscape, we cannot conveniently calculate what that is. Therefore, we still cannot efficiently find a solution.

This is where things get clever for D-Wave. Instead of starting at the desired landscape, the company starts with the bowl and puts all the particles in the ground state of the bowl. Next, it slowly and carefully deforms the bowl to the more complicated landscape we care about (this is called an adiabatic process, hence the name adiabatic quantum computer). If it's done carefully, the particles stay in the ground state—and at the end of the transformation, we have the solution.

Afterward, to get the answer, we simply read out the state of all the particles. Job done.

Where's the quantum at?

As described, this is a purely classical process. The bits may be quantum entities, but you could also think of them as tiny magnets flipping up and down—no quantumness required. The quantum part comes in because D-Wave's hardware relies on quantum tunneling. In a complex landscape, the quantum bits (qubits) may get trapped in an energy minimum that is not actually the solution. (It's a local minimum but not the absolute minimum in the landscape.) Classically, the qubits would have to heat up and climb out of the minimum to reach a deeper minimum. Quantum particles, however, can tunnel through such barriers.The difference is that first one qubit would have to flip its state (increasing the energy), followed by another and another, each increasing the energy. This means that in cases where several neighboring qubits have to change states to reach a lower energy, the new state might take the age of the Universe to occur if there is no quantum tunneling. If tunneling's available, on the other hand, it could happen very quickly.

(For high-but-thin barriers, tunneling is quite likely. However, as the barrier width increases, it gets less likely. D-Wave must rely on keeping energy barriers thin.)

Naturally, there's a catch. If the same process must occur between qubits that are only weakly coupled (e.g., via other qubits), then quantum tunneling becomes a very improbable process and offers little to no advantage in exiting the local minimum.

D-Wave's computer relies on its hardware physically undergoing this tunneling process. For those of you who want to play along at home, however, you can actually do the equivalent of all this on an ordinary computer. The process is called simulated annealing (as opposed to the real physical annealing that D-Wave does), and it simply relies on math that models the physical system. You can even simulate quantum annealing, which is the digital analog of what D-Wave is trying to show its computer really doing.

Do we hate annealing?

D-Wave may use a different quantum process than other efforts, but its processor's operation is not a fundamental difference between it and other researchers. Instead, it is D-Wave's approach to the problem that truly sets the company apart.The traditional approach is what I think of as "sensible-physics, zero-engineering." That is, at every step in the process, the scientists involved can accurately say they are sure that their bits are in fact quantum in nature—they are qubits. These scientists measure coherence times, they verify entanglement between qubits, and they perform toy computations to show that it all works as expected.

But there is little to no discussion of scaling the number of qubits up to something substantial and useful. Integration between a quantum computer with an ordinary computer is left as an exercise for the reader.

D-Wave, on the other hand, has gone for "sensible engineering, risky physics." First, they chose a technology that they knew could scale. Their qubits are based on superconducting quantum interference devices (SQUIDs). SQUIDs can be printed on circuit boards using photolithography; other people's research has shown they are coherent and can be entangled. These properties do not come automatically, though, so you might want to spend some time confirming that entanglement happens in your hardware.

However, instead of pursuing confirmation of quantum-ness, D-Wave focused on the engineering: drive up the number of qubits, make sure that their system integrates nicely with off-the-shelf computers, and optimize the annealing process so that computations can be performed as quickly as possible. This is sensible engineering, and the company has proved to be adept at it. D-Wave produced a co-processor that performs physical annealing on a remarkably high number of bits quickly and simply. We should all be in awe of that achievement.

The risk lurks in the physics, however. D-Wave didn't really know if the SQUIDs were behaving as classical bits or as qubits. To be fair, this is not an easy measurement to make—I am not sure how you would directly confirm entanglement between ten or more qubits, for instance.

Instead of doing those measurements, D-Wave chose a less direct route, what you might call performance-based validation. Its argument goes as follows: if we know that a particular problem scales one way on a classical computer and another way on a quantum computer, we can compare scaling by solving problems of different sizes and see what happens.

To give a simple (and fake) example: the problem of counting all the eggs in a single basket scales linearly with the number of eggs on a quantum computer; on a classical computer it scales exponentially. For zero eggs, both computers perform the same. At one egg, the quantum computer is twice as fast. By the time we get to 10 eggs, the quantum computer is 2,000 times faster. So, 10 eggs would make the performance validation clear.

In this analogy, D-Wave's device is only capable of solving the egg-counting problem for up to three eggs, where the optimum speed-up is a factor of seven. With this level of difference, the devil is in the implementation details—how the algorithm is actually handled—on both computers. Even if you measure a factor of seven, you can't be sure what that factor actually means.

Nevertheless, this has proven to be a fruitful and practical approach for D-Wave. The scaling measurements show behavior that looks more like a quantum computer than a classical computer. Unfortunately, for every demonstration so far, it has been possible to find a classical implementation that cheats and beats the D-Wave processor.

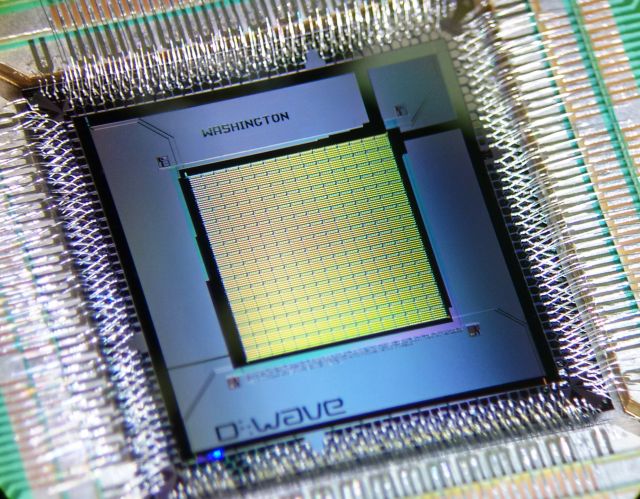

D-Wave

We found the quantum

But late in 2014, Google, D-Wave, NASA, and Michigan State University scientists showed that, yes, the D-Wave processor does exhibit tunneling.At least locally, but what is meant by locally? This comes down to the structure of the D-Wave processor. Ideally for simulated annealing to work for all problems, every qubit should be connected to every other qubit. For a 1,000-qubit board, that represents a substantial challenge. To avoid this, the qubits are grouped into sets of eight. Within a group, each qubit is connected to four other qubits. For two neighboring groups of eight, four qubits from each group are connected pair-wise to each other. Effectively, each group of eight is strongly coupled to each other, while qubits from different groups are weakly coupled. To demonstrate tunneling within the strongly coupled groups, researchers set up a problem involving just two groups. The true minimum energy solution involves all spins in both groups pointing in the same direction (e.g., 16 ones or 16 zeros, depending on how you define one and zero). A second solution is a local minimum, consisting of one group of spins pointing up and the other pointing down.

Once in the local minimum, it is very difficult for a classical annealer to exit because eight bits must be flipped, and the first seven flips increase the energy of the group. This is statistically highly unlikely. But if tunneling is allowed, all eight bits can flip simultaneously, passing through that high potential barrier rather than going over it.

In effect, at high temperature, a classical annealing process can exit the local minimum because the thermal energy is enough to punt the bits over the barrier. But at low temperature, there is insufficient energy, so it always falls back to the local minimum. Similarly, at high temperature, tunneling should be destroyed by noise, so it shouldn't help. At low temperature, it should enable the annealer to avoid the wrong solution.

This is exactly what the researchers observed. A closer examination since then has only strengthened the case. To summarize the findings, the D-Wave processor does show evidence of using quantum tunneling in densely interconnected regions. That's great, but there is no evidence yet for tunneling between weakly connected regions.

Are short tunnels enough?

Tunneling within the local group works and is effective, while tunneling between groups is a less certain proposition. This is the crux of the latest paper: does this limitation matter? Do you get a speed-up thanks to local tunneling, even if it isn't the full speed-up expected from a purely quantum computer? In other words, D-Wave has tried to determine whether the limited interconnectivity will kill off the supposed computational advantage.Excuse a small spoiler, but the answer is complicated—while the recent paper is suggestive, it doesn't really answer the question definitively.

The approach that the team took is pretty much the same as previous papers on performance: set up a problem and compare scaling between various forms of simulated annealing on a normal computer and physical annealing on a D-Wave chip. In this case the problem chosen was... simulating the D-Wave architecture. That is, given the interconnectivity of the qubits, what is the energy minimum? Naturally, the D-Wave machine is pretty well optimized for this problem. In fact, it even exploits the local tunneling to maximum effect, as flipping all bits simultaneously (tunneling) is much more probable than the classical process of flipping each qubit individually.

And for this problem, the paper describes two important results. First, the D-Wave processor is much faster than the two classical algorithms that it was compared to. And that speed-up is about 108—a very impressive result.

But... there are two buts here. The first is that the scaling is no better than the best classical algorithm (quantum Monte Carlo). So the speed-up is a constant factor, and there's no sign of scaling. The second point is that the D-Wave processor might be even faster for smaller problem sets. This is because the physical annealing process has a minimum time (set in the control software) that may well be longer than the actual time required.

This good news may, in fact, be bad news. Why? Well, if the D-Wave machine is faster than it appears for small problems, then instead of a constant difference between it and the classical algorithm (they scale the same, but D-Wave is always faster), the difference gets smaller as the problem size increases rather than larger as D-Wave would hope to observe. In this case, the scaling looks more like the worst performing classical algorithm (simulated annealing), rather than the best. That is not a pretty picture.

There is yet another caveat in the results. The general simulated annealing routine can be tweaked if you know what sort of problem you are solving. One of those tweaks, for instance, is to first search for clusters of strongly connected qubits that are weakly connected to neighboring clusters. These clusters are then replaced by a single pseudo-bit. This process automatically avoids the local minimum of a cluster assuming the wrong orientation.

This tweaked classical algorithm spanks the D-Wave processor.

Is it all that bad?

Given this mixture of results, can we draw any conclusions? The main one is that the D-Wave processor simulates the D-Wave processor very efficiently. This is better news than it sounds. After all, if it can't do that, it can't be expected to do anything else very well. A secondary conclusion is that the D-Wave processor is starting to show some promise—there are clearly some quantum effects at play.That conclusion, however, comes with a big if. The cost of mapping a problem to the D-Wave architecture cannot be too high, both in terms of creating the map and later pulling a result out of it. Otherwise, these speed gains will be wiped out just by operating the hardware. That if is pretty big.

There's also a less welcome conclusion from D-Wave's perspective. Although local quantum tunneling has been confirmed, it looks like the helpfulness of local tunneling is limited. So far, it offers a constant factor speed-up over a conventional computer, but it does not offer better scaling.

This has its own caveats, however. We cannot say that the scaling at small problem sizes eliminates the chance of better scaling at larger problem sizes, because the scaling in these results may be dominated by implementation details rather than the physical annealing process. To put it simply, we still can't really predict how D-Wave's processor is going to perform for really large problems.

The final conclusion seems to be that the architecture of the processor itself is starting to limit its usefulness. This is apparent to D-Wave engineers as well, because they are already planning a next generation that has a different architecture, rather than increasing the number of qubits. The engineers are hoping to design a new, improved D-Wave processor that has the connectivity of a ten-dimensional cubic lattice. Although details are limited, it seems that the plan is to use highly interconnected clusters that have more limited connectivity between clusters. The advantage is that the increased connection density within clusters—and the increasing connectedness between clusters—should allow larger problem sets to be more efficiently implemented. This is highly promising.

Given how pessimistic I was about D-Wave way back in 2007, I have to admit the company has come much further than anyone reasonably expected them to. D-Wave has demonstrated some very elegant engineering, and I am actually hopeful that they can pull off this more challenging architecture. And with that much in place, it may turn out that they can match some of their eye-popping, earlier claims after all.

No comments:

Post a Comment